YMYL in the Age of AI: How to Build Trust for Health, Finance, and Legal Content

Last Updated: February 28, 2025

When someone asks an AI about their tax situation, their medical symptoms, or their legal rights, the stakes are high. A wrong answer could cost money, health, or freedom.

AI systems know this. They apply extra scrutiny to what Google calls YMYL—Your Money or Your Life—content. These are topics where bad information could genuinely harm users.

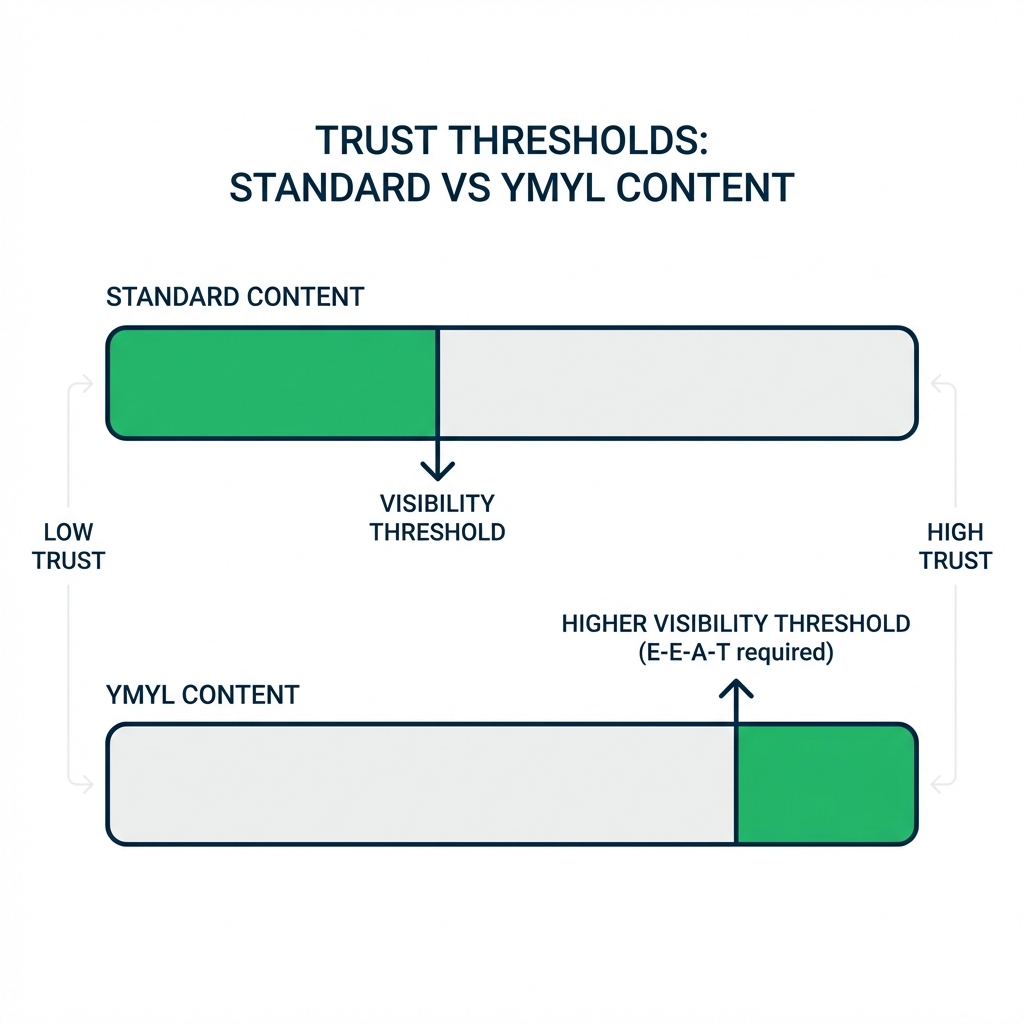

If you produce content in health, finance, legal, safety, or other high-stakes categories, AI systems are evaluating you more skeptically than they evaluate a blog about coffee recipes. They're looking for signals of experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) before recommending your content.

This creates both a challenge and an opportunity. The challenge: a higher bar for visibility. The opportunity: once you clear that bar, you earn deeper trust, and AI is more willing to recommend you confidently.

Here's how to build the trust signals YMYL content needs for AI visibility.

Table of Contents

- What Is YMYL and Why AI Cares

- How AI Evaluates Trust for YMYL Content

- E-E-A-T for AI: Evidence of Expertise

- The Consensus Authority Principle

- YMYL-Specific Schema Strategies

- Content Approaches for High-Trust Topics

- Industry-Specific YMYL Guidelines

- Building Author Authority

- Common YMYL Mistakes to Avoid

- FAQ

What Is YMYL and Why AI Cares

YMYL Categories

YMYL content covers topics where misinformation could harm users:

| Category | Examples |
|---|---|
| Health & Medical | Symptoms, treatments, medications, diagnoses |
| Financial | Investing, taxes, insurance, banking |
| Legal | Rights, regulations, legal advice |
| Safety | Product safety, emergency procedures |
| News/Current Events | Political news, civic information |
| Groups of People | Information about protected groups |

Why AI Is Extra Cautious

AI systems, especially those shaped by the safety updates that followed ChatGPT's launch, have been trained to:

- Avoid harm: Don't give advice that could hurt users

- Defer to experts: Prefer credentialed sources

- Acknowledge uncertainty: Hedge when unsure

- Recommend consultation: Suggest seeing professionals

For YMYL topics, AI's default is conservative. It won't confidently recommend your financial advice unless it has strong signals that you're trustworthy.

The YMYL Trust Threshold

The bar is higher. Content that would be fine for general topics might not clear the threshold for YMYL.

How AI Evaluates Trust for YMYL Content

AI systems look for specific trust signals:

Primary Trust Signals

| Signal | How AI Detects It | Weight for YMYL |
|---|---|---|
| Author credentials | Schema, bios, external verification | Very High |
| Source citations | Links to medical/legal/financial authorities | Very High |
| Institutional backing | Published by known organizations | High |
| Expert consensus | Claims align with authoritative sources | Very High |
| Recency | Content freshness | High |
| Review/edit process | Medical/legal review disclosures | High |

Secondary Trust Signals

| Signal | How AI Detects It | Weight |
|---|---|---|
| Third-party mentions | Your org cited by authoritative sources | Medium |
| Professional associations | Verified affiliations | Medium |
| Domain authority | General site trust signals | Medium |
| User engagement | Comments, shares, feedback | Low |

Platforms like AICarma track Sentiment scores alongside Visibility, helping you understand how AI perceives your YMYL content's trustworthiness across different models.

What AI Is Checking

For health content, AI essentially asks:

- Is this written or reviewed by a medical professional?

- Does it align with medical consensus (CDC, WHO, NIH)?

- Are claims supported by citations?

- Is there appropriate hedging for uncertainty?

For financial content:

- Is this from a licensed financial advisor or an established institution?

- Does it align with regulatory guidance (SEC, IRS)?

- Are appropriate disclaimers present?

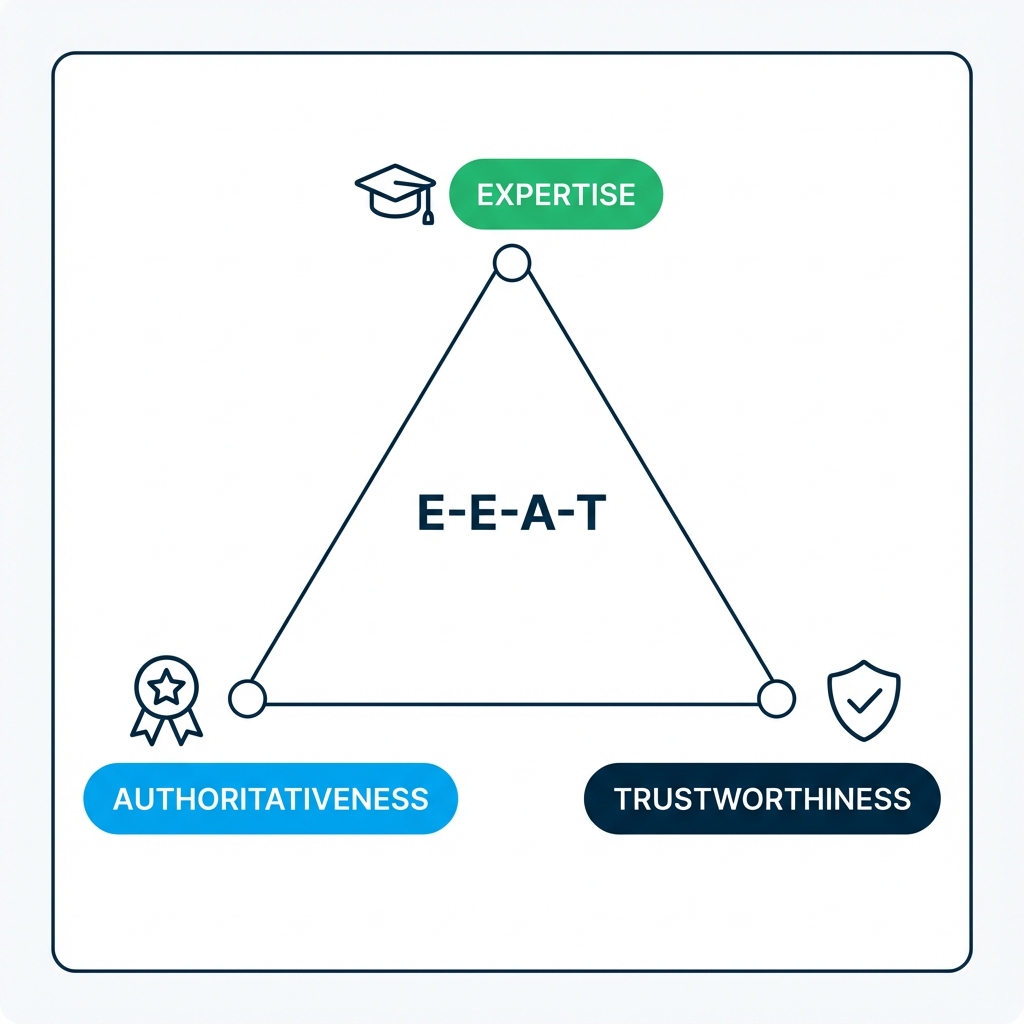

E-E-A-T for AI: Evidence of Expertise

Google's E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) framework applies to AI as well.

Experience

Show that authors have firsthand experience:

| Experience Signal | How to Demonstrate |
|---|---|
| "I've treated this condition for 15 years" | First-person experience statements |
| Case studies from practice | Real-world examples |
| Personal anecdotes with lessons | Narrative evidence |

Expertise

Prove formal qualifications:

| Expertise Signal | How to Demonstrate |
|---|---|
| MD, JD, CPA, CFP credentials | Clear author bios |
| Institution affiliations | Verified connections |
| Published research | Links to publications |
| Speaking engagements | Conference/media appearances |

Authoritativeness

Show recognition by others:

| Authority Signal | How to Demonstrate |
|---|---|
| Cited by other authorities | Backlinks from .gov, .edu |
| Media coverage | Press mentions |
| Awards/recognition | Industry accolades |
| Peer endorsements | Quotes from other experts |

Trustworthiness

Demonstrate reliability:

| Trust Signal | How to Demonstrate |
|---|---|
| Transparent disclosure | Clear about conflicts of interest |
| Editorial process | "Reviewed by Jane Smith, MD" |
| Source citations | Link to primary sources |
| Correction policy | How you handle errors |

The Consensus Authority Principle

For YMYL topics, AI systems are trained to align with expert consensus. This has direct consequences for how you should frame your claims.

What Consensus Authority Means

AI doesn't just look at your credentials—it checks if your claims match what authoritative bodies say.

| Your Claim | Authoritative Consensus | AI Behavior |
|---|---|---|
| Matches | Aligned | High confidence, will cite |
| Extends | Compatible | Moderate confidence |
| Contradicts | Conflicting | Will not cite, may warn |

Why This Matters

If you're writing about health and your advice contradicts CDC guidance, AI will either ignore you or actively contradict you. This isn't about censorship—it's about the AI protecting users from potential harm.

Working Within Consensus

This doesn't mean you can't have unique perspectives. But structure content appropriately:

For consensus-aligned content: Present it confidently with citations.

For emerging/debated topics: Acknowledge the debate, cite multiple perspectives, avoid definitive claims.

For contrarian views: Present as one perspective, not "the truth." Acknowledge mainstream position first.

YMYL-Specific Schema Strategies

Schema markup takes on special importance for YMYL content.

Medical Content Schema

```json
{
  "@context": "https://schema.org",
  "@type": "MedicalWebPage",
  "mainContentOfPage": {
    "@type": "WebPageElement",
    "text": "..."
  },
  "lastReviewed": "2025-02-15",
  "reviewedBy": {
    "@type": "Person",
    "name": "Dr. Jane Smith",
    "jobTitle": "Board-Certified Dermatologist",
    "affiliation": {
      "@type": "Organization",
      "name": "Stanford Health Care"
    }
  }
}
```
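If your pages come out of a CMS or build script, it can help to generate this markup from stored data rather than hand-editing it. The sketch below is a minimal Python example, assuming reviewer details are stored alongside each article; the helper names `medical_webpage_jsonld` and `to_script_tag` are hypothetical, not part of any library.

```python
import json
from datetime import date


def medical_webpage_jsonld(reviewer_name: str, reviewer_title: str,
                           affiliation: str, last_reviewed: date) -> dict:
    """Build MedicalWebPage JSON-LD shaped like the example above (hypothetical helper)."""
    return {
        "@context": "https://schema.org",
        "@type": "MedicalWebPage",
        # ISO 8601 date string, e.g. "2025-02-15"
        "lastReviewed": last_reviewed.isoformat(),
        "reviewedBy": {
            "@type": "Person",
            "name": reviewer_name,
            "jobTitle": reviewer_title,
            "affiliation": {"@type": "Organization", "name": affiliation},
        },
    }


def to_script_tag(data: dict) -> str:
    """Serialize JSON-LD for embedding in the page's HTML."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


page = medical_webpage_jsonld(
    "Dr. Jane Smith", "Board-Certified Dermatologist",
    "Stanford Health Care", date(2025, 2, 15),
)
print(to_script_tag(page))
```

Keeping the review date as a real `date` until serialization makes it harder to publish a malformed `lastReviewed` value.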

Author Credentials Schema

```json
{
  "@context": "https://schema.org",
  "@type": "Person",
  "name": "Dr. John Smith",
  "jobTitle": "Certified Financial Planner",
  "hasCredential": [
    {
      "@type": "EducationalOccupationalCredential",
      "credentialCategory": "Certification",
      "name": "CFP"
    }
  ],
  "alumniOf": {
    "@type": "EducationalOrganization",
    "name": "Wharton School of Business"
  },
  "memberOf": {
    "@type": "Organization",
    "name": "Financial Planning Association"
  }
}
```
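When an author holds several credentials, a small builder keeps each `hasCredential` entry consistent. This is a sketch under stated assumptions: the helper names, the (name, category) tuple format, and the second credential in the usage example are hypothetical illustrations, and the `credentialCategory` values should follow whatever vocabulary you settle on.

```python
import json


def credential(name: str, category: str = "Certification") -> dict:
    """One EducationalOccupationalCredential entry, as in the example above."""
    return {
        "@type": "EducationalOccupationalCredential",
        "credentialCategory": category,
        "name": name,
    }


def author_person(name: str, job_title: str, credentials: list[tuple[str, str]],
                  alumni_of: str, member_of: str) -> dict:
    """Person JSON-LD with one hasCredential entry per (name, category) pair."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "hasCredential": [credential(n, c) for n, c in credentials],
        "alumniOf": {"@type": "EducationalOrganization", "name": alumni_of},
        "memberOf": {"@type": "Organization", "name": member_of},
    }


author = author_person(
    "Dr. John Smith", "Certified Financial Planner",
    # The CPA license is a hypothetical second credential for illustration
    [("CFP", "Certification"), ("CPA", "License")],
    "Wharton School of Business", "Financial Planning Association",
)
print(json.dumps(author, indent=2))
```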

Citation Schema

Link to authoritative sources with explicit markup:

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "citation": [
    {
      "@type": "CreativeWork",
      "name": "CDC Guidelines on...",
      "url": "https://www.cdc.gov/..."
    }
  ]
}
```
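Citation markup is most credible when it mirrors the references readers can actually see. The Python sketch below builds the `citation` array from (title, URL) pairs and flags any cited URL outside an allow-list of authorities so an editor can review it. The allow-list and helper names are hypothetical, and a domain check is only a rough screen, not a judgment of a source's quality.

```python
from urllib.parse import urlparse

# Hypothetical allow-list; adapt it to the authorities in your own field.
AUTHORITATIVE_DOMAINS = {"cdc.gov", "nih.gov", "who.int", "sec.gov", "irs.gov"}


def is_authoritative(url: str) -> bool:
    """True if the URL's host is an allow-listed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in AUTHORITATIVE_DOMAINS)


def article_with_citations(headline: str, sources: list[tuple[str, str]]) -> dict:
    """Article JSON-LD whose citation list mirrors the visible references."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "citation": [
            {"@type": "CreativeWork", "name": name, "url": url}
            for name, url in sources
        ],
    }


def non_authoritative(article: dict) -> list[str]:
    """Cited URLs outside the allow-list, returned for manual review."""
    return [c["url"] for c in article["citation"] if not is_authoritative(c["url"])]


article = article_with_citations(
    "Example health article",
    [("CDC Guidelines on...", "https://www.cdc.gov/..."),
     ("A blog post", "https://example.com/blog-post")],
)
print(non_authoritative(article))  # → ['https://example.com/blog-post']
```

Matching on whole domain labels (`host.endswith("." + d)`) avoids treating a lookalike host such as `fakecdc.gov` as the CDC.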

Content Approaches for High-Trust Topics

Structure for YMYL Credibility

| Element | Purpose | Example |
|---|---|---|
| Author byline | Establish credentials | "By Jane Smith, MD" |
| Reviewer note | Show expert validation | "Medically reviewed by..." |
| Date + update | Show recency | "Published: Feb 2025" |
| Disclaimers | Manage expectations | "Not medical advice..." |
| Source citations | Prove basis | Linked references throughout |

Content Tone for YMYL

Avoid:

- Absolute claims ("This will cure...")

- Fearmongering ("You must do this or else...")

- Dismissing mainstream guidance

Prefer:

- Hedged language ("Research suggests...")

- Encouraging professional consultation

- Acknowledging complexity
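Tone problems like these are easy to miss in long articles. A crude keyword screen can surface candidate sentences for an editor to rewrite. The Python sketch below is only that: the phrase list is hypothetical and deliberately small, it is not a model of how any AI system scores tone, and a human still decides whether a flagged sentence needs hedging.

```python
import re

# Hypothetical starter list based on the "Avoid" examples above; extend per niche.
RISKY_PATTERNS = {
    r"\bwill cure\b": "absolute claim",
    r"\bdefinitely\b": "overconfident wording",
    r"\byou must\b": "possible fearmongering",
    r"\bor else\b": "possible fearmongering",
}


def flag_tone(text: str) -> list[tuple[str, str]]:
    """Return (matched text, reason) pairs, grouped by pattern, for an editor to review."""
    findings = []
    for pattern, reason in RISKY_PATTERNS.items():
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((match.group(0), reason))
    return findings


draft = "This supplement will cure your rash. You must start today."
for phrase, reason in flag_tone(draft):
    print(f"{phrase!r}: {reason}")
```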

The "Talk to Your Doctor" Requirement

AI systems are trained to recommend professional consultation for YMYL topics. Your content should too:

| Too Aggressive | Appropriate |
|---|---|
| "Take this supplement to cure your condition" | "Discuss this supplement with your doctor" |
| "Follow this tax strategy" | "Consider discussing this strategy with a tax professional" |
| "This is definitely illegal" | "Consult an attorney for advice on your specific situation" |

Industry-Specific YMYL Guidelines

Health & Medical

| Must Have | How to Implement |
|---|---|
| Medical review | "Reviewed by X, MD" + Schema |
| Source citations | Link to NIH, CDC, peer-reviewed journals |
| Updated dates | Visible last-reviewed date |
| Appropriate scope | Don't diagnose; describe symptoms |
| Professional referral | "See your doctor if..." |

Financial

| Must Have | How to Implement |
|---|---|
| Financial credentials | CFA, CFP, CPA designations visible |
| Regulatory awareness | Mention relevant regulations (SEC, IRS) |
| Risk disclosure | "Past performance doesn't guarantee..." |
| Personalization caveat | "Depends on your situation" |
| Professional referral | "Consult a financial advisor" |

Legal

| Must Have | How to Implement |
|---|---|
| Attorney review | "Reviewed by licensed attorney" |
| Jurisdiction notes | "Laws vary by state" |
| Non-advice disclaimer | "Not legal advice; consult an attorney" |
| Recency | Review regularly; legal info goes stale quickly |
| Complexity acknowledgment | "This is a complex area of law" |
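The must-have tables above also work as a review checklist. Below is a minimal Python sketch, assuming an editor records which elements each page contains. The `present` flags, requirement labels, and page URLs are hypothetical metadata you would maintain yourself. It reports what each page is still missing for its vertical.

```python
# Must-haves from the three tables above, keyed by vertical (labels are our own).
REQUIREMENTS: dict[str, set[str]] = {
    "health": {"medical_review", "source_citations", "updated_dates",
               "appropriate_scope", "professional_referral"},
    "financial": {"financial_credentials", "regulatory_awareness",
                  "risk_disclosure", "personalization_caveat",
                  "professional_referral"},
    "legal": {"attorney_review", "jurisdiction_notes", "non_advice_disclaimer",
              "recency", "complexity_acknowledgment"},
}


def missing_requirements(vertical: str, present: set[str]) -> list[str]:
    """Sorted list of must-haves the page does not yet have."""
    return sorted(REQUIREMENTS[vertical] - present)


def audit(pages: list[dict]) -> dict[str, list[str]]:
    """Map each page URL to its gaps; pages that meet every requirement are omitted."""
    report = {}
    for page in pages:
        gaps = missing_requirements(page["vertical"], set(page["present"]))
        if gaps:
            report[page["url"]] = gaps
    return report


pages = [
    {"url": "/tax-deductions", "vertical": "financial",
     "present": ["financial_credentials", "risk_disclosure",
                 "personalization_caveat", "professional_referral",
                 "regulatory_awareness"]},
    {"url": "/tenant-rights", "vertical": "legal",
     "present": ["attorney_review", "recency"]},
]
print(audit(pages))
```

Elements such as "appropriate scope" still need human judgment; the checklist only records that someone confirmed them.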

Building Author Authority

For YMYL, author credibility is paramount.

Author Page Requirements

Each author should have a dedicated page with:

| Element | Purpose |
|---|---|
| Full credentials | Degrees, certifications, licenses |
| Experience | Years of practice, specializations |
| Affiliations | Hospitals, firms, associations |
| Publications | Books, research, media appearances |
| Photo | Humanizes and verifies |
| Social proof | Awards, speaking, recognition |

Author Schema

Implement detailed Schema on author pages:

```json
{
  "@context": "https://schema.org",
  "@type": "ProfilePage",
  "mainEntity": {
    "@type": "Person",
    "name": "Dr. Sarah Johnson",
    "jobTitle": "Cardiologist",
    "description": "Board-certified cardiologist with 20 years of experience...",
    "image": "https://example.com/dr-johnson.jpg",
    "hasCredential": [...],
    "alumniOf": [...],
    "memberOf": [...],
    "sameAs": [
      "https://linkedin.com/in/dr-sarah-johnson",
      "https://doximity.com/dr-sarah-johnson",
      "https://healthgrades.com/dr-sarah-johnson"
    ]
  }
}
```
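Before publishing an author page, it can be worth checking that the markup covers the elements from the Author Page Requirements table. This Python sketch wraps a Person in ProfilePage markup, gives it a stable `@id` that articles can point to, and lists missing fields. The field mapping, the `#person` fragment convention, and the profile URL are assumptions for illustration.

```python
from urllib.parse import urlparse

# Our (assumed) mapping of the author-page requirements onto Person properties.
REQUIRED_PERSON_FIELDS = ("name", "jobTitle", "description", "image",
                          "hasCredential", "alumniOf", "memberOf", "sameAs")


def profile_page(person: dict, profile_url: str) -> dict:
    """Wrap a Person in ProfilePage JSON-LD and give it a stable @id."""
    entity = {**person, "@type": "Person", "@id": f"{profile_url}#person"}
    return {
        "@context": "https://schema.org",
        "@type": "ProfilePage",
        "url": profile_url,
        "mainEntity": entity,
    }


def profile_gaps(page: dict) -> list[str]:
    """List empty or missing Person fields, plus sameAs links that are not https."""
    person = page["mainEntity"]
    gaps = [field for field in REQUIRED_PERSON_FIELDS if not person.get(field)]
    for link in person.get("sameAs", []):
        if urlparse(link).scheme != "https":
            gaps.append(f"non-https sameAs: {link}")
    return gaps


page = profile_page(
    {
        "name": "Dr. Sarah Johnson",
        "jobTitle": "Cardiologist",
        "description": "Board-certified cardiologist with 20 years of experience...",
        "image": "https://example.com/dr-johnson.jpg",
        "sameAs": ["https://linkedin.com/in/dr-sarah-johnson",
                   # deliberately http to show the check
                   "http://healthgrades.com/dr-sarah-johnson"],
    },
    "https://example.com/authors/dr-sarah-johnson",  # hypothetical URL
)
print(profile_gaps(page))
```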

Linking Authors to Content

Every YMYL article should clearly link to the author page:

| Location | Implementation |
|---|---|
| Article byline | Linked author name |
| Article Schema | Author property with @id reference |
| Reviewer callout | If different from author |
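In JSON-LD, an `@id` reference ties an article to the author entity described on the author page instead of repeating the author's details in every article. Here is a minimal Python sketch, assuming the author page declares an `@id` of the form `<profile URL>#person`. That pattern, the site URL, and the slugs are hypothetical choices for illustration. Because schema.org defines `reviewedBy` on WebPage rather than Article, the reviewer goes on the page node.

```python
import json
from typing import Optional

SITE = "https://example.com"  # hypothetical site root


def person_ref(slug: str) -> dict:
    """Reference to the Person defined on /authors/<slug> (assumed @id pattern)."""
    return {"@id": f"{SITE}/authors/{slug}#person"}


def article_graph(url: str, headline: str, author_slug: str,
                  reviewer_slug: Optional[str] = None) -> dict:
    """Link a WebPage and its Article to author/reviewer entities by @id."""
    article = {
        "@type": "Article",
        "@id": f"{url}#article",
        "headline": headline,
        "author": person_ref(author_slug),
    }
    page = {"@type": "WebPage", "@id": url, "mainEntity": {"@id": article["@id"]}}
    # Only add a reviewer callout when someone other than the author reviewed it
    if reviewer_slug and reviewer_slug != author_slug:
        page["reviewedBy"] = person_ref(reviewer_slug)
    return {"@context": "https://schema.org", "@graph": [page, article]}


graph = article_graph(f"{SITE}/heart-health", "Heart Health Basics",
                      "dr-sarah-johnson", reviewer_slug="jane-smith")
print(json.dumps(graph, indent=2))
```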

Common YMYL Mistakes to Avoid

Mistake 1: Missing Author Credentials

Problem: Anonymous or under-credentialed authors on YMYL content.

Fix: Every article needs a qualified author with visible credentials.

Mistake 2: Outdated Information

Problem: Medical or legal advice from 2019 still live in 2025.

Fix: Review and update YMYL content regularly, and show "Last reviewed" dates.

Mistake 3: No Source Citations

Problem: Claims without references to authoritative sources.

Fix: Link to the CDC, NIH, SEC, and authoritative legal sources, and make citations visible.

Mistake 4: Overconfident Claims

Problem: Writing "This treatment works" instead of "Research suggests this treatment may help."

Fix: Use hedging that reflects the real complexity and uncertainty.

Mistake 5: Ignoring Mainstream Consensus

Problem: Presenting fringe views as established fact.

Fix: Acknowledge mainstream consensus first, and present alternative views as perspectives.

Mistake 6: Missing Disclaimers

Problem: No "not a substitute for professional advice" language.

Fix: Include appropriate disclaimers, especially for medical, legal, and financial content.

FAQ

Does E-E-A-T apply to AI the same way it applies to Google Search?

Yes, the principles are the same. AI systems (especially those from Google) are trained to prefer content that demonstrates Experience, Expertise, Authoritativeness, and Trustworthiness. The signals may be weighted differently, but the framework applies.

Can I write YMYL content without professional credentials?

Technically yes, but visibility will be limited. For best results: have credentialed experts write or review content, clearly disclose your role, cite authoritative sources extensively, and be appropriately humble about the limits of non-professional advice.

How strict are AI safety filters for YMYL?

Very strict. AI models are specifically fine-tuned to be cautious about health, financial, and legal advice. They'll often defer to professionals ("consult your doctor"), refuse to give specific advice, or hedge significantly. Your content needs to pass similar safety thresholds to be cited.

Do disclaimers actually help with AI visibility?

Disclaimers signal appropriate scope awareness. They won't make bad content rank, but they demonstrate trustworthiness. Include them prominently for legal protection and trust signaling.

How do I update old YMYL content?

Audit for accuracy (do claims still hold?), update citations to current sources, add "Last reviewed" dates, have a credentialed expert re-review, and update Schema markup to reflect the new review date.
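Parts of that audit can be automated. The Python sketch below flags pages whose `lastReviewed` date is missing or older than a review interval, and records a new review in the JSON-LD. The one-year interval and the helper names are assumptions, and the date should only change after a credentialed expert has actually re-reviewed the content.

```python
from datetime import date, timedelta

# Hypothetical cadence; fast-moving topics may need a shorter interval.
REVIEW_INTERVAL = timedelta(days=365)


def is_stale(jsonld: dict, today: date, interval: timedelta = REVIEW_INTERVAL) -> bool:
    """True if lastReviewed is missing or older than the review interval."""
    last = jsonld.get("lastReviewed")
    if not last:
        return True
    return today - date.fromisoformat(last) > interval


def record_review(jsonld: dict, reviewer: dict, today: date) -> dict:
    """Return a copy with the new review date and reviewer; call only after a real re-review."""
    updated = dict(jsonld)
    updated["lastReviewed"] = today.isoformat()
    updated["reviewedBy"] = reviewer
    return updated


old = {"@type": "MedicalWebPage", "lastReviewed": "2023-01-10"}
today = date(2025, 2, 28)
if is_stale(old, today):
    reviewer = {"@type": "Person", "name": "Dr. Jane Smith"}
    print(record_review(old, reviewer, today))
```

Returning a copy instead of mutating the input keeps the old review date available for a change log.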