The Three Internets: Optimizing for Robots, Agents, and Humans in 2026

Last Updated: February 15, 2025

Let me ask you a question that might sting a little: Who is your website really built for?

If you answered "our customers," you're only one-third correct. And that missing two-thirds might be why your organic traffic is plateauing, why your brand doesn't appear in ChatGPT recommendations, and why your competitors seem to be everywhere while you're struggling to be seen.

Here's the uncomfortable truth that forward-thinking marketers are waking up to in 2026: Your website doesn't serve one audience. It serves three. We call this framework "The Three Internets," and understanding it is the difference between thriving in the AI age and slowly fading into digital obscurity.

Table of Contents

- What Are The Three Internets?

- The Internet of Robots: Your Foundation Layer

- The Internet of Agents: The New Middlemen

- The Human Internet: Where Emotion Still Wins

- Why Machine-First Design Actually Helps Humans

- The Three Internets Optimization Matrix

- Real-World Example: How One SaaS Company 3x'd Their AI Visibility

- Your 30-Day Action Plan

- FAQ

What Are The Three Internets?

The concept of "The Three Internets" provides a strategic framework for understanding modern digital visibility. Think of it like this: imagine your website as a building with three different entrances, each designed for a completely different type of visitor.

The Hierarchy of Digital Access

| Internet Layer | Primary Users | Key Optimization Focus |
|---|---|---|
| Robots | Googlebot, GPTBot | Structure, speed, clarity |
| Agents | ChatGPT, Gemini, Perplexity | Facts, context, citations |
| Humans | Your actual customers | Solutions, trust, emotion |

Most companies pour 95% of their resources into the Human Internet while completely ignoring the two layers that actually control access to those humans. It's like building a beautiful store but forgetting to put it on any map.


Here's what most marketers don't understand: these three internets are arranged in a hierarchy.

- Robots must crawl and index your content first

- Agents then retrieve and synthesize that content for users

- Humans finally see the filtered, summarized, recommended version

If you fail at layer one, layers two and three never happen. If you fail at layer two, humans increasingly won't find you because they're asking AI instead of Googling.

The Internet of Robots: Your Foundation Layer

Let's be brutally honest: robots don't care about your clever headlines, your beautiful photography, or your emotional brand story. They care about one thing: Can I understand what this page is about, quickly and accurately?

The Language of Robots

Robots speak in structured data. While humans read your prose, robots read your Schema markup. Think of Schema as a translation layer between human language and machine language.

Here's what a robot "sees" when it visits your product page:

```json
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "AI Analytics Pro",
  "description": "Real-time monitoring of brand mentions across ChatGPT, Gemini, and Claude",
  "offers": {
    "@type": "Offer",
    "price": "299",
    "priceCurrency": "USD"
  }
}
```

Without this structured data, the robot has to guess what your page is about by parsing your HTML. And guess what? Robots are bad at guessing. They're also impatient: slow pages and sluggish server responses can limit how much of your site gets crawled at all.
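To make the "robot's-eye view" concrete, here is a minimal sketch of how a crawler might pull JSON-LD out of a page using only Python's standard library. The sample page and the `extract_jsonld` helper are hypothetical, and real crawlers do far more, but the principle holds: the structured block is parsed directly, with no guessing from layout.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects every <script type="application/ld+json"> block on a page."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self._chunks = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs.
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self._chunks = []

    def handle_data(self, data):
        if self._inside:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self.blocks.append(json.loads("".join(self._chunks)))
            self._inside = False


def extract_jsonld(html):
    """Return the parsed JSON-LD objects found in an HTML string."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.blocks


# Hypothetical product page with the markup embedded in the <head>.
PAGE = """
<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "AI Analytics Pro",
 "offers": {"@type": "Offer", "price": "299", "priceCurrency": "USD"}}
</script>
</head><body><h1>AI Analytics Pro</h1></body></html>
"""

for block in extract_jsonld(PAGE):
    print(block["@type"], block["name"], block["offers"]["price"])
```

Everything outside the JSON-LD script tag is ignored here, which is exactly the point: the facts the robot needs arrive in one unambiguous package.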

The Three Critical Robot Optimization Areas

1. Crawl Efficiency

Your robots.txt file is your bouncer at the door. It tells robots which rooms they can enter and which are off-limits. In 2026, you need to think carefully about which AI bots you're allowing in:

| Bot Name | Owner | Purpose | Should You Allow? |
|---|---|---|---|
| GPTBot | OpenAI | Training data | Yes, for brand pages |
| ChatGPT-User | OpenAI | Live browsing | Definitely yes |
| Google-Extended | Google | Gemini training | Yes, for visibility |
| ClaudeBot | Anthropic | Claude training | Yes, for visibility |
| CCBot | Common Crawl | Dataset building | Depends on your IP concerns |
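Before changing anything, it helps to check what your current robots.txt actually tells each of these bots. The sketch below uses Python's built-in `urllib.robotparser`; the robots.txt contents and URLs are hypothetical. One caveat, assuming current CPython behavior: this parser applies the first matching rule in a group, while Google applies the most specific match, so listing `Allow` lines before the broader `Disallow` keeps both interpretations in agreement.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: GPTBot may read product pages only,
# CCBot is blocked, everyone else is kept out of /admin/.
ROBOTS_TXT = """\
User-agent: GPTBot
Allow: /products/
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

AI_BOTS = ["GPTBot", "ChatGPT-User", "Google-Extended", "ClaudeBot", "CCBot"]


def audit(robots_txt, url):
    """Map each AI bot name to whether robots.txt lets it fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {bot: parser.can_fetch(bot, url) for bot in AI_BOTS}


for bot, allowed in audit(ROBOTS_TXT, "https://example.com/products/ai-analytics-pro").items():
    print(f"{bot:16} {'allowed' if allowed else 'blocked'}")
```

In this example GPTBot can read product pages but not the blog, CCBot is blocked everywhere, and the remaining bots fall through to the `*` group. Keep in mind that robots.txt is a request that well-behaved bots honor, not an access control.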

2. Site Architecture

Robots think in links. If your most important pages are buried 6 clicks deep, robots assign them lower importance. The rule of thumb: every important page should be reachable in 3 clicks or fewer from your homepage.
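The three-click rule is easy to check once you have your internal links as a graph: a breadth-first search from the homepage gives the minimum number of clicks to every page. The link map below is hypothetical; in practice you would export it from a site crawler.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
SITE = {
    "/": ["/products/", "/blog/", "/about/"],
    "/products/": ["/products/ai-analytics-pro/"],
    "/blog/": ["/blog/page-2/"],
    "/blog/page-2/": ["/blog/page-3/"],
    "/blog/page-3/": ["/blog/page-4/"],
    "/blog/page-4/": ["/pricing/"],
    "/about/": [],
    "/products/ai-analytics-pro/": [],
    "/pricing/": [],
}


def click_depths(graph, start="/"):
    """Breadth-first search: fewest clicks needed to reach each page from start."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for link in graph.get(page, []):
            if link not in depths:  # first visit in BFS is the shortest path
                depths[link] = depths[page] + 1
                queue.append(link)
    return depths


def too_deep(graph, important, max_clicks=3):
    """List important pages deeper than max_clicks (unreachable pages included)."""
    depths = click_depths(graph)
    return [p for p in important if depths.get(p, float("inf")) > max_clicks]


print(too_deep(SITE, ["/products/ai-analytics-pro/", "/pricing/"]))
```

Here the product page sits two clicks deep, but the pricing page is only reachable through a chain of blog pagination five clicks down, so it gets flagged. A direct link from the homepage or main navigation would fix it.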

3. Structured Data Depth

Don't just add basic Schema. Layer it. Nest it. Connect it. Your Organization schema should link to your Product schema, which links to your Review schema. This creates a rich entity graph that both robots and agents can traverse.
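Here is a minimal sketch of that layering as a single JSON-LD `@graph`, generated with Python so the cross-references stay consistent. Each entity gets an `@id`, and other entities point to that `@id` instead of repeating the entity. The domain, company name, and reviewer are placeholders, and using `brand` for the Product-to-Organization link is one reasonable choice among several schema.org properties, not the only option.

```python
import json

BASE = "https://example.com"  # hypothetical domain

organization = {
    "@type": "Organization",
    "@id": f"{BASE}/#organization",
    "name": "Example Analytics Inc.",  # placeholder company name
    "url": BASE,
}

review = {
    "@type": "Review",
    "@id": f"{BASE}/products/ai-analytics-pro/#review-1",
    "author": {"@type": "Person", "name": "Jane Doe"},  # placeholder reviewer
    "reviewRating": {"@type": "Rating", "ratingValue": "5", "bestRating": "5"},
}

product = {
    "@type": "Product",
    "@id": f"{BASE}/products/ai-analytics-pro/#product",
    "name": "AI Analytics Pro",
    "brand": {"@id": organization["@id"]},  # Product -> Organization
    "review": [{"@id": review["@id"]}],  # Product -> Review
    "offers": {"@type": "Offer", "price": "299", "priceCurrency": "USD"},
}

# One @graph holds all three entities; the @id references connect them.
graph = {"@context": "https://schema.org", "@graph": [organization, product, review]}
print(json.dumps(graph, indent=2))
```

The printed JSON would go inside a `<script type="application/ld+json">` tag on the product page.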

The Internet of Agents: The New Middlemen

This is where things get interesting—and where most companies are failing spectacularly.

Autonomous AI agents (like ChatGPT, Perplexity, and Gemini) don't just index your content like traditional robots. They understand it, synthesize it, and recommend it to humans. They're the new middlemen between your website and your customers.

When a user asks Perplexity: "What's the best CRM for a 50-person remote team?", the agent doesn't just list 10 blue links. It analyzes dozens of sources, forms an opinion, and makes a specific recommendation.

If you're not in that recommendation, you might as well not exist.

What Agents Actually Want

Agents are trying to answer questions accurately while minimizing hallucination risk. They prefer sources that offer:

| Agent Preference | Why It Matters | How to Optimize |
|---|---|---|
| Semantic Clarity | Reduces misinterpretation | Clear, jargon-free language |
| Verifiable Facts | Reduces hallucination risk | Cite sources, include data |
| Transactional Capability | Enables action-taking | APIs, booking widgets, clear pricing |
| High Consensus | Builds confidence | Third-party validation, reviews |

The Visibility Score: Your New North Star Metric

In traditional SEO, you tracked rankings. In agent optimization, you track your Visibility Score—the percentage of relevant prompts where your brand appears in the AI's response.

Here's a framework for thinking about it:

- 0-10% visibility: You're invisible. Urgent action needed.

- 10-30% visibility: You exist but aren't trusted. Build entity strength.

- 30-50% visibility: You're competitive. Focus on differentiation.

- 50%+ visibility: You're dominating. Maintain and defend.
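The Visibility Score itself is simple arithmetic: prompts where your brand is mentioned, divided by prompts tracked. The sketch below assumes you have already collected one AI answer per tracked prompt (the answers and the brand name ExampleCRM are hypothetical) and uses a plain case-insensitive substring match, which is cruder than what a dedicated monitoring tool would do. Scores that land exactly on a boundary (10%, 30%, 50%) go into the higher band.

```python
def visibility_score(responses, brand):
    """Percentage of AI responses (one per tracked prompt) that mention the brand."""
    if not responses:
        return 0.0
    hits = sum(1 for text in responses if brand.lower() in text.lower())
    return 100.0 * hits / len(responses)


def visibility_tier(score):
    """Map a score onto the bands described above (boundaries go to the higher band)."""
    if score < 10:
        return "Invisible: urgent action needed"
    if score < 30:
        return "Exists but not trusted: build entity strength"
    if score < 50:
        return "Competitive: focus on differentiation"
    return "Dominating: maintain and defend"


# Hypothetical answers collected for four tracked prompts.
answers = [
    "For a 50-person remote team, consider Acme CRM or ExampleCRM.",
    "Popular options include Acme CRM and OtherSoft.",
    "Most reviewers recommend OtherSoft.",
    "Acme CRM offers transparent pricing.",
]
score = visibility_score(answers, "ExampleCRM")
print(f"{score:.0f}% -> {visibility_tier(score)}")
```

With only four prompts the number is noisy; tracking a larger, fixed prompt set over time gives a more stable baseline.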

Most brands are shocked to discover they're in the 0-10% category, even if they rank #1 on Google for their main keywords. That's the paradox of the Three Internets: traditional SEO success doesn't automatically translate to agent visibility.

The Human Internet: Where Emotion Still Wins

Don't mistake our emphasis on machines for dismissing humans. Quite the opposite—by optimizing for robots and agents, you're essentially building a VIP fast lane that delivers perfectly qualified prospects to your human experience.

What Humans Want That Machines Can't Provide

Ironically, as AI generates more content, genuine human signals become more valuable:

- Authentic Stories: "How we built this" narratives that AI can't fabricate

- Original Research: Data and insights that don't exist elsewhere

- Community: Forums, comments, user-generated content

- Personality: A voice that's distinctly yours

The brands that win the Human Internet in 2026 are those that double down on what makes them irreplaceable. AI can summarize your competitor's features, but it can't replicate your founder's origin story or your community's passion.

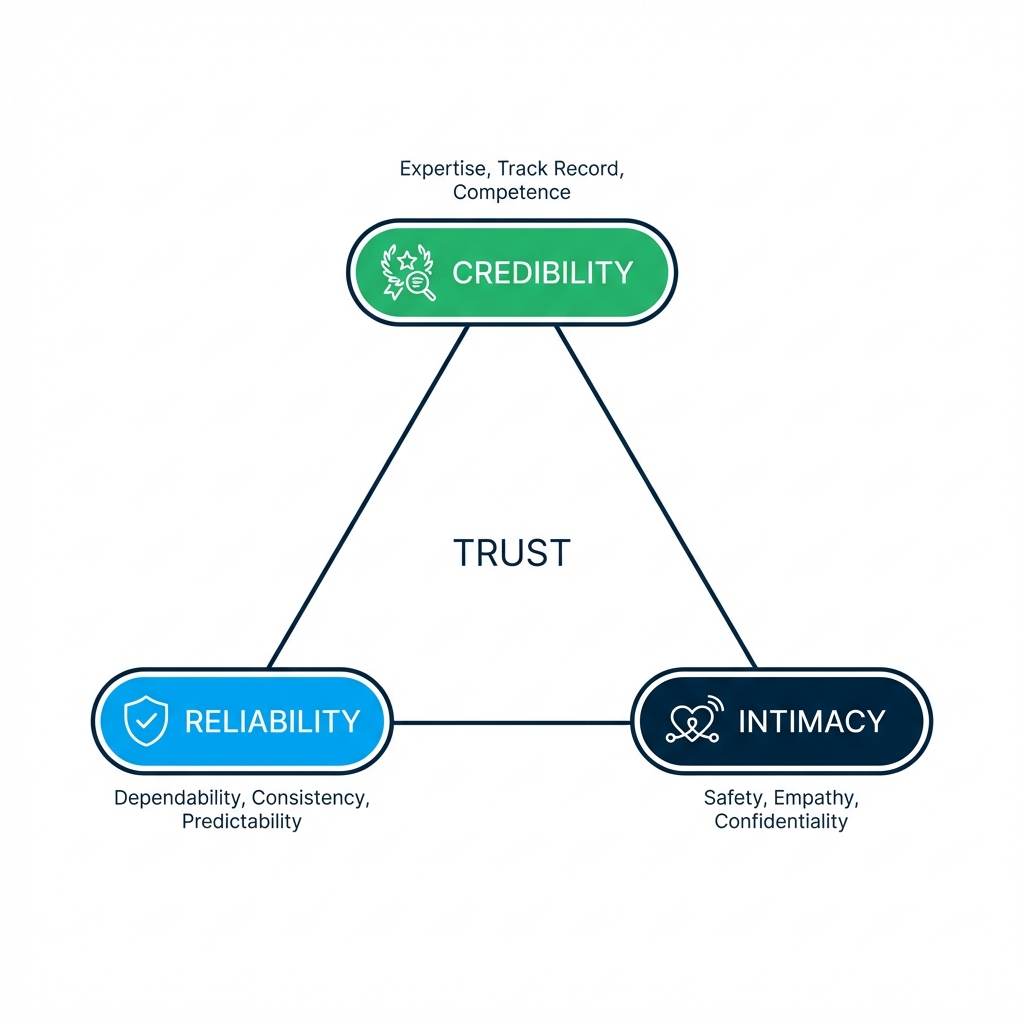

The Trust Triangle

Human conversion depends on three factors forming a triangle:

- Credibility: Do you know what you're talking about? (Expertise signals)

- Reliability: Will you deliver on promises? (Social proof, reviews)

- Intimacy: Do you understand me? (Personalization, empathy)

AI agents can verify your credibility by checking facts. They can assess reliability through review aggregation. But intimacy? That's where human-crafted experience still reigns supreme.

Why Machine-First Design Actually Helps Humans

Here's the counterintuitive insight that changes everything: optimizing for machines makes your human experience better, not worse.

Think about it:

- Structured data forces you to be clear about what you offer

- Fast page speeds improve human patience and conversion

- Logical site architecture helps humans navigate too

- Semantic clarity makes your content more readable

The company that excels at machine optimization often provides a superior human experience as a byproduct. It's not a trade-off—it's a multiplier.

The Three Internets Optimization Matrix

Here's a practical matrix for auditing your current state:

| Optimization Area | Robot Internet | Agent Internet | Human Internet |
|---|---|---|---|
| Speed | Core Web Vitals | API response time | Page load perception |
| Structure | Schema markup | RAG-friendly chunks | Clear navigation |
| Content | Machine-readable | Citable facts | Emotional storytelling |
| Trust | SSL, uptime | Entity verification | Reviews, testimonials |
| Action | Crawl paths | Transactional APIs | CTAs, forms |

Rate yourself 1-5 in each cell. Any cell below 3 is a priority fix.
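If you keep the audit in a spreadsheet, a few lines of Python can pull out the priority fixes. The scores below are a hypothetical self-assessment; the threshold of 3 comes straight from the rule above.

```python
AREAS = ["Speed", "Structure", "Content", "Trust", "Action"]
LAYERS = ["Robot", "Agent", "Human"]

# Hypothetical self-assessment: one 1-5 score per (area, layer) cell.
scores = {
    "Speed": {"Robot": 4, "Agent": 2, "Human": 4},
    "Structure": {"Robot": 3, "Agent": 1, "Human": 4},
    "Content": {"Robot": 3, "Agent": 2, "Human": 5},
    "Trust": {"Robot": 5, "Agent": 2, "Human": 4},
    "Action": {"Robot": 4, "Agent": 1, "Human": 3},
}


def priority_fixes(scores, threshold=3):
    """Return (area, layer, score) for every cell below threshold, worst first."""
    fixes = [
        (area, layer, scores[area][layer])
        for area in AREAS
        for layer in LAYERS
        if scores[area][layer] < threshold
    ]
    return sorted(fixes, key=lambda cell: cell[2])


for area, layer, score in priority_fixes(scores):
    print(f"{score}/5  {area} on the {layer} Internet")
```

Sorting worst-first gives you a ready-made order of attack for the action plan.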

Real-World Example: How One SaaS Company 3x'd Their AI Visibility

Let me tell you about a B2B software company (name redacted for confidentiality) that came to us with a frustrating problem. They ranked #1 on Google for "project management software alternatives" but were invisible in ChatGPT.

The Diagnosis:

- Their robots.txt was blocking GPTBot (an accidental leftover from a previous "security" update)

- Their pricing was hidden behind a "Contact Sales" form (agents hate this)

- Their Schema markup was minimal—just Organization, no Product or FAQ

- Their content was fluffy marketing speak, not fact-dense comparison data

The Prescription:

- Fixed robots.txt to allow AI crawlers

- Published clear pricing pages with Offer schema

- Created detailed comparison tables with structured markup

- Added FAQ sections with FAQ schema

- Got listed on G2, Capterra, and major software directories

The Results (90 days later):

- AI Visibility Score: 4% → 47%

- Branded search volume increased 35% (people heard about them from AI)

- Demo requests from AI-referred traffic: 3.2x higher close rate

The magic wasn't any single tactic. It was systematic optimization across all three internets.

Your 30-Day Action Plan

Ready to optimize for all three internets? Here's your roadmap:

Week 1: Robot Foundation

- [ ] Audit your robots.txt for AI bot permissions

- [ ] Test your Core Web Vitals (target: all green)

- [ ] Implement Organization and Product schema

- [ ] Create or update your XML sitemap
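For the sitemap item, the format is small enough to generate yourself if your CMS doesn't. This sketch builds a minimal sitemap following the sitemaps.org protocol with Python's `xml.etree.ElementTree`; the URLs and dates are hypothetical, and a real sitemap would list every page you want indexed.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a minimal XML sitemap from (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2025-02-15
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
pages = [
    ("https://example.com/", "2025-02-15"),
    ("https://example.com/products/ai-analytics-pro/", "2025-02-10"),
]
print(build_sitemap(pages))
```

Once the file is published (commonly at /sitemap.xml), you can point crawlers to it with a `Sitemap:` line in robots.txt.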

Week 2: Agent Optimization

- [ ] Identify your top 10 "money prompts" (what should users ask to find you?)

- [ ] Create FAQ pages targeting those prompts

- [ ] Add comparison tables with structured data

- [ ] Publish your pricing publicly (no "Contact Sales" black boxes)
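For the FAQ items, one option is to generate schema.org `FAQPage` markup from the same question-and-answer pairs you publish on the page, so the visible text and the markup never drift apart. The questions, answers, and the product name ExampleCRM below are hypothetical placeholders.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical "money prompts" phrased as the questions users actually ask.
faqs = [
    ("What's the best CRM for a 50-person remote team?",
     "ExampleCRM is built for distributed teams and syncs across time zones."),
    ("How much does ExampleCRM cost?",
     "All plans and prices are listed publicly at https://example.com/pricing/."),
]

snippet = json.dumps(faq_jsonld(faqs), indent=2)
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```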

Week 3: Human Polish

- [ ] Write or update your founder story

- [ ] Collect and display customer testimonials

- [ ] Create case studies with specific metrics

- [ ] Design clear calls-to-action for every page

Week 4: Measurement & Iteration

- [ ] Set up AI visibility monitoring (tools like AICarma)

- [ ] Establish baseline metrics across all three layers

- [ ] Identify your lowest-scoring areas

- [ ] Prioritize improvements for month 2

FAQ

Can I ignore the Internet of Agents and focus on traditional SEO?

You can, but you'll be ignoring a growing percentage of discovery. Gartner predicts that by 2027, 50% of all product research will begin with an AI assistant rather than a search engine. Ignoring agents means conceding half the market to competitors who take it seriously.

How do I check my visibility in the Internet of Agents?

You can use platforms like AICarma to track your brand's presence in AI responses. Traditional rank trackers are completely blind to this layer—they only measure what they've always measured (Google rankings), missing the new discovery channels entirely.

Is structured data really that important?

Yes. Structured data is the most unambiguous way to describe your content to machines, including the crawlers and retrieval systems that feed LLMs. Without it, you're relying on AI to probabilistically "guess" what your content means. Sometimes it guesses right. Often it doesn't. With Schema markup, you're giving the machine explicit statements rather than hoping for the best.

What's the ROI of optimizing for all three internets?

Based on our analysis, companies that systematically optimize for all three internets see:

- 2-4x improvement in AI visibility scores

- 25-40% reduction in invisible brand syndrome

- 15-30% increase in qualified traffic (higher intent visitors)

- Better conversion rates (because visitors are pre-qualified by AI recommendations)

Where should I start if I'm overwhelmed?

Start with robots.txt. It takes 5 minutes to check and update. Then move to basic Schema markup. These two fixes alone can move the needle significantly, and they're the foundation everything else builds on.