SaaS GEO Playbook: How to Get Your Software Recommended by ChatGPT

Last Updated: April 12, 2025

"What's the best CRM for a 50-person sales team?" "Which project management tool works best for remote agencies?" "Recommend a marketing automation platform for e-commerce businesses."

These aren't hypothetical prompts. Buyers put questions like these to ChatGPT, Claude, Gemini, and Perplexity every day.

And for each query, AI systems are recommending a handful of winners. If you're not among them, you're invisible to a rapidly growing channel of product discovery.

For B2B SaaS companies, this is an existential challenge. Your target customers are increasingly asking AI for software recommendations before they ever Google "best [category] software." The competitive dynamics have shifted, and most SaaS companies are completely unprepared.

This playbook provides the tactical framework for SaaS companies to achieve and maintain strong AI visibility.

Table of Contents

- Why SaaS Is Uniquely Affected

- Understanding AI Recommendation Logic for Software

- The SaaS GEO Audit: Where Do You Stand?

- Pillar 1: Technical Foundation

- Pillar 2: Comparison Content Domination

- Pillar 3: Review Platform Presence

- Pillar 4: Entity and Authority Building

- Pillar 5: Feature-Based Positioning

- The 90-Day SaaS GEO Sprint

- FAQ

Why SaaS Is Uniquely Affected

B2B SaaS faces specific challenges in the AI visibility landscape:

Challenge 1: Category Crowding

Most SaaS categories have dozens of competitors:

- CRM: 100+ options

- Project Management: 75+ options

- Marketing Automation: 50+ options

When a user asks "What's the best CRM?", an AI answer typically names only 3-5 options. Everyone else is invisible.

Challenge 2: High-Consideration Purchase

SaaS is typically evaluated carefully:

- Long sales cycles

- Multiple stakeholders

- Migration costs

- Integration requirements

Users ask detailed questions. AI needs comprehensive information to recommend confidently.

Challenge 3: Rapid Feature Evolution

SaaS products evolve constantly, but a model's training data is frozen at its cutoff date. Unless the assistant retrieves live web results, your breakthrough feature from last quarter might not exist in ChatGPT's knowledge.

Challenge 4: Comparison-Heavy Evaluation

Users frequently ask comparison questions:

- "[Your software] vs [Competitor]"

- "Which is better: [A] or [B]?"

- "[Your software] alternatives"

If you don't control these comparisons, competitors (or neutral parties) do.

The SaaS AI Visibility Imperative

| Stage | Traditional Approach | AI-First Approach |

|---|---|---|

| Awareness | Paid ads, content marketing | AI visibility |

| Consideration | Demos, case studies | AI comparisons |

| Decision | Sales calls | AI recommendations |

SaaS companies that master AI visibility gain an unfair advantage in an increasingly competitive market. For enterprise SaaS, the trend is accelerating a shift from traditional market research to asking AI models directly.

Understanding AI Recommendation Logic for Software

When AI recommends software, it's synthesizing multiple signals:

Primary Signals

| Signal | Source | Weight |

|---|---|---|

| Category association | Training data, Schema | High |

| Feature matching | Product docs, comparisons | High |

| Review sentiment | G2, Capterra, Reddit | High |

| Market position | Wikipedia, news, directories | Medium |

| Pricing clarity | Website, structured data | Medium |

| Recency | Recent mentions, RAG retrieval | Medium |

What AI "Looks For" in SaaS Recommendations

1. Is this software clearly categorized? (CRM, PM tool, etc.)

2. Does it match the user's specific needs? (team size, use case)

3. Is it well-reviewed by credible sources?

4. Is there sufficient information to recommend confidently?

5. What's the consensus across multiple sources?

The Confidence Threshold

As a working model, assume AI recommends only software it has enough consistent information about. Low confidence results in a vague mention or an omission.

| Confidence Level | AI Behavior |

|---|---|

| High | "For your needs, I recommend [Product]" |

| Medium | "[Product] is one option to consider" |

| Low | Lists products without recommendation |

| Very Low | Omits product entirely |

Your goal: push your product above the confidence threshold.

The SaaS GEO Audit: Where Do You Stand?

Before optimizing, assess your current position:

Audit Step 1: Visibility Testing

Run these prompts across ChatGPT, Claude, and Gemini:

| Prompt | Check For |

|---|---|

| "Best [your category] software" | Are you mentioned? Position? |

| "[Your product] vs [main competitor]" | Favorable comparison? |

| "[Your category] for [your ideal customer type]" | Are you matched to your ICP? |

| "What is [Your Product]?" | Accurate description? |

| "[Your product] pricing" | Does AI know your pricing? |

| "[Your product] pros and cons" | What weaknesses are cited? |

For systematic tracking, platforms like AICarma automate this audit across 10+ AI models, providing Visibility, Sentiment, and Ranking scores in a single dashboard.
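To run the audit consistently, it can help to generate the prompt list from templates instead of typing each prompt by hand. The sketch below is one possible way to do that; the function name, the placeholder names, and the example product are illustrative assumptions, not part of any tool mentioned here.

```python
import itertools

# Templates mirror the audit table above; placeholder names are illustrative.
PROMPT_TEMPLATES = [
    "Best {category} software",
    "{product} vs {competitor}",
    "{category} for {icp}",
    "What is {product}?",
    "{product} pricing",
    "{product} pros and cons",
]


def build_audit_prompts(product, category, competitors, icps):
    """Expand every template for each competitor and customer type, without duplicates."""
    prompts = []
    for template in PROMPT_TEMPLATES:
        for competitor, icp in itertools.product(competitors, icps):
            prompt = template.format(
                product=product, category=category, competitor=competitor, icp=icp
            )
            # Templates that ignore a placeholder produce repeats; keep the first.
            if prompt not in prompts:
                prompts.append(prompt)
    return prompts
```

Calling `build_audit_prompts("Acme CRM", "CRM", ["Rival CRM"], ["startups", "remote agencies"])` yields one prompt per template, plus one extra per additional competitor or customer type. Both lists must be non-empty for any prompts to be produced.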

Audit Step 2: Technical Check

| Factor | How to Check | Goal |

|---|---|---|

| robots.txt | yourdomain.com/robots.txt | AI bots allowed |

| Schema | Google Rich Results Test | SoftwareApplication schema |

| Page speed | PageSpeed Insights | Core Web Vitals green |

| Pricing visibility | Manual check | Public, machine-readable |

Audit Step 3: Authority Assessment

| Source | Have It? | Quality? |

|---|---|---|

| G2 profile | Yes/No | Reviews, accuracy |

| Capterra profile | Yes/No | Reviews, accuracy |

| Wikipedia | Yes/No | Article status |

| Crunchbase | Yes/No | Completeness |

| Industry press | Frequency | Recent coverage |

Audit Scorecard

Score yourself 1-5 on each factor. Anything below 3 is a priority fix.

| Factor | Score | Priority |

|---|---|---|

| Category visibility | /5 | |

| Competitor comparison wins | /5 | |

| Accurate AI description | /5 | |

| Technical foundation | /5 | |

| Review platform presence | /5 | |

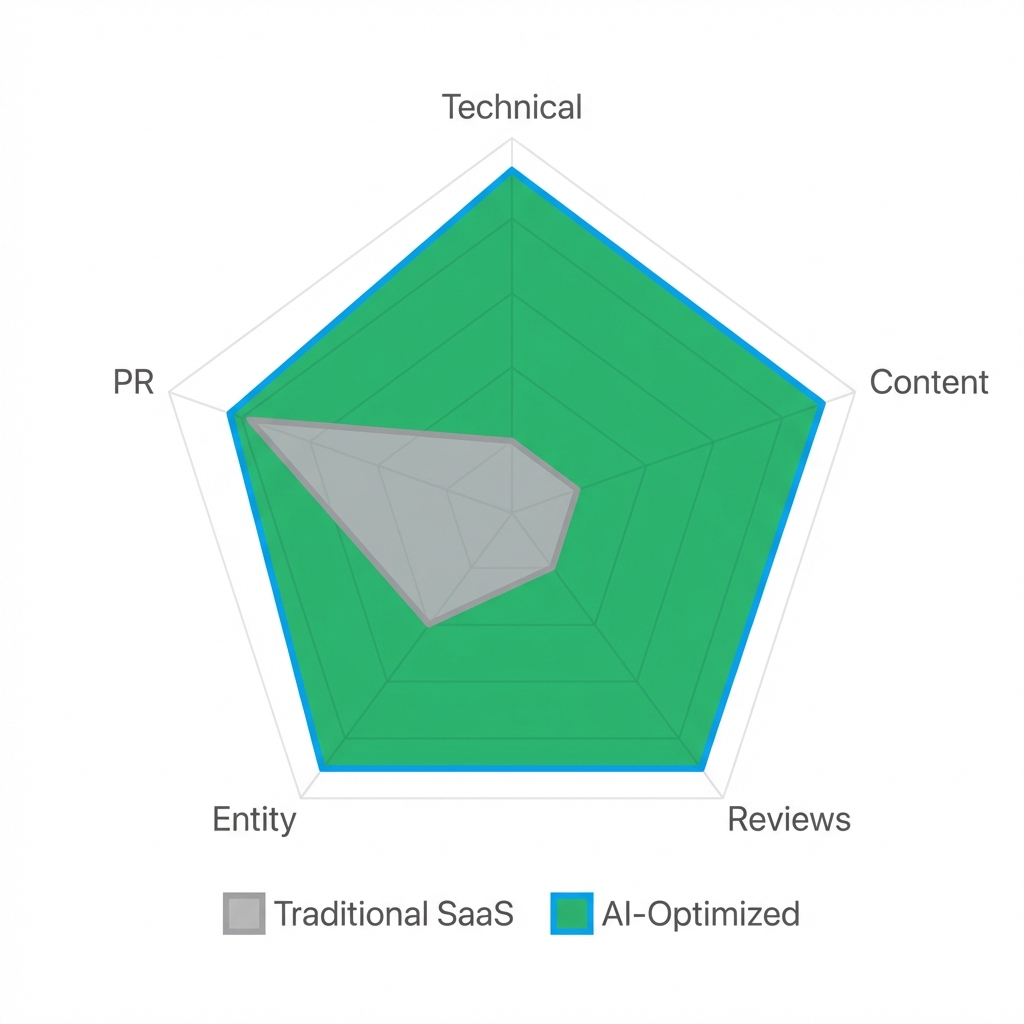

The SaaS Visibility Radar
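The scorecard rule (anything below 3 is a priority fix) is simple enough to apply mechanically. Here is a minimal sketch, assuming scores are kept in a plain dictionary; the function name and the example scores are made up for illustration.

```python
def priority_fixes(scores, threshold=3):
    """Return the factors scoring below `threshold`, weakest first."""
    for factor, score in scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{factor}: score must be between 1 and 5, got {score}")
    weak = [factor for factor, score in scores.items() if score < threshold]
    # sorted() is stable, so tied factors keep their scorecard order.
    return sorted(weak, key=lambda factor: scores[factor])


# Example scores are invented for illustration only.
example_scores = {
    "Category visibility": 2,
    "Competitor comparison wins": 3,
    "Accurate AI description": 4,
    "Technical foundation": 1,
    "Review platform presence": 2,
}
print(priority_fixes(example_scores))
```

Sorting weakest first gives a natural work order for the 90-day sprint.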

Pillar 1: Technical Foundation

Technical optimization is the foundation everything builds on.

robots.txt Configuration

Ensure AI crawlers can access your public content:

User-agent: GPTBot
Allow: /

User-agent: ChatGPT-User
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: Google-Extended
Allow: /

See our complete robots.txt guide for detailed configuration.
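One way to verify the configuration is to parse the file the same way a crawler would. The sketch below uses Python's standard `urllib.robotparser`; the user-agent list repeats the four tokens shown above, and the function name and example URL are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# The same four tokens used in the robots.txt example above.
AI_USER_AGENTS = ["GPTBot", "ChatGPT-User", "ClaudeBot", "Google-Extended"]


def check_ai_access(robots_txt, url="https://example.com/"):
    """Report, per AI user agent, whether `url` may be fetched under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in AI_USER_AGENTS}


# A file that blocks GPTBot but allows everyone else.
sample = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""
print(check_ai_access(sample))
```

To test a live site, fetch `yourdomain.com/robots.txt` and pass its text in. Note that this parser treats a blank line as the end of a group, so each `User-agent` line and its rules should stay together without blank lines between them.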

SoftwareApplication Schema

Every SaaS needs proper Schema markup:

{
  "@context": "https://schema.org",
  "@type": "SoftwareApplication",
  "name": "Your SaaS Product",
  "applicationCategory": "BusinessApplication",
  "applicationSubCategory": "CRM Software",
  "operatingSystem": "Web Browser",
  "offers": {
    "@type": "AggregateOffer",
    "lowPrice": "29",
    "highPrice": "299",
    "priceCurrency": "USD"
  },
  "aggregateRating": {
    "@type": "AggregateRating",
    "ratingValue": "4.7",
    "reviewCount": "543"
  },
  "featureList": [
    "Contact Management",
    "Pipeline Tracking",
    "Email Integration",
    "Reporting & Analytics"
  ]
}
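Schema markup like the example above is usually embedded in the page inside a `<script type="application/ld+json">` tag. The following sketch shows one way to render that tag from a Python dictionary. The `REQUIRED_KEYS` checklist is a hypothetical sanity check chosen for this example, not an official validation rule, so run the result through the Google Rich Results Test as well.

```python
import json

# Hypothetical minimum checklist for this playbook, not an official requirement.
REQUIRED_KEYS = ("@context", "@type", "name", "applicationCategory", "offers")


def render_json_ld(data):
    """Serialize `data` as JSON-LD and wrap it in a script tag for the page head."""
    missing = [key for key in REQUIRED_KEYS if key not in data]
    if missing:
        raise ValueError(f"missing schema fields: {', '.join(missing)}")
    payload = json.dumps(data, indent=2)
    # Escape "</" so a stray "</script>" inside a value cannot close the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


product = {
    "@context": "https://schema.org",
    "@type": "SoftwareApplication",
    "name": "Your SaaS Product",
    "applicationCategory": "BusinessApplication",
    "offers": {
        "@type": "AggregateOffer",
        "lowPrice": "29",
        "highPrice": "299",
        "priceCurrency": "USD",
    },
}
print(render_json_ld(product))
```

Generating the markup from the same data that drives the pricing page keeps the two from drifting apart.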

Pricing Transparency

This is crucial. Pricing hidden behind "Contact Sales" gives AI nothing to cite or compare:

| Pricing Display | AI Impact |

|---|---|

| Public, specific | AI can cite and compare |

| "Starting at $X" | Partial—AI knows floor |

| "Contact for pricing" | AI cannot recommend confidently |

If you must have enterprise tiers that require sales, at least publish self-serve pricing. Example: "Teams: $49/user | Enterprise: Contact us"
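Public tiers can also be expressed as structured data so the prices are machine-readable. The sketch below converts a list of tiers into schema.org `Offer` entries and skips any tier without a public price. The function name, the tier names, and the choice to format prices with two decimals are assumptions for illustration.

```python
def build_offers(tiers, currency="USD"):
    """Turn (tier name, price) pairs into schema.org Offer entries.

    Tiers whose price is None (for example, "Contact us") are left out,
    because there is no public number to publish.
    """
    offers = []
    for name, price in tiers:
        if price is None:
            continue
        offers.append(
            {
                "@type": "Offer",
                "name": name,
                "price": f"{price:.2f}",
                "priceCurrency": currency,
            }
        )
    return offers


# Mirrors the example above: a self-serve tier plus a sales-led tier.
print(build_offers([("Teams", 49), ("Enterprise", None)]))
```

The resulting list can be used as the `offers` value in the SoftwareApplication markup in place of a single price range.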

Pillar 2: Comparison Content Domination

Users ask comparison questions constantly. Control the comparison narrative.

Creating Comparison Pages

For your top 5-10 competitors, create "[Your Product] vs [Competitor]" pages:

| Element | Include |

|---|---|

| Feature comparison table | Detailed, fair comparison |

| Pricing comparison | Both products' pricing |

| Use case recommendations | "Best for X vs Best for Y" |

| FAQ schema | Common comparison questions |

| User testimonials | From switchers if possible |
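For the FAQ schema element, each comparison page needs a `FAQPage` object whose `mainEntity` lists the questions and answers. Here is a minimal sketch that builds that structure from question and answer pairs. The function name and the sample question are illustrative, and the answer text is a placeholder rather than a real product claim.

```python
import json


def build_faq_schema(qa_pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


faq = build_faq_schema(
    [
        (
            "Is [Your Product] cheaper than [Competitor]?",
            "Placeholder answer: state both products' public prices here.",
        )
    ]
)
print(json.dumps(faq, indent=2))
```

The questions should match the visible FAQ text on the page, since markup that differs from the page content undermines the credibility the comparison is trying to build.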

Comparison Content Guidelines

Be fair: Overtly biased comparisons hurt credibility. Acknowledge competitor strengths.

Be specific: "Better UI" is weak. "Drag-and-drop pipeline with 50% faster onboarding" is strong.

Be current: Outdated comparisons harm trust. Update when competitors change.

The "Alternatives" Page

Create a "[Your Category] Alternatives to [Popular Competitor]" page:

- Position yourself as the smart alternative

- Target users dissatisfied with market leaders

- Capture "alternatives to X" prompts

Pillar 3: Review Platform Presence

AI heavily weights review platforms—they're trusted, third-party sources.

Priority Platforms for SaaS

| Platform | Priority | Info Needed |

|---|---|---|

| G2 | Critical | Complete profile, reviews |

| Capterra | Critical | Complete profile, reviews |

| TrustRadius | High | Profile and reviews |

| GetApp | Medium | Profile |

| Software Advice | Medium | Profile |

Optimizing Your Profiles

| Element | Action |

|---|---|

| Product description | Clear, factual, keyword-rich |

| Category placement | Correct primary and secondary categories |

| Feature list | Complete and accurate |

| Pricing | Up-to-date, detailed tiers |

| Screenshots | Current, compelling |

| Vendor responses | Respond to all reviews professionally |

Review Strategy

| Tactic | Details |

|---|---|

| Volume | Aim for 100+ reviews on G2 |

| Recency | 10+ reviews in last 6 months |

| Quality | Detailed reviews > star-only |

| Balance | Mix of segments and use cases |

| Authenticity | Never fake—platforms detect, AI may too |

Reviews are training data. AI reads them to understand sentiment and use cases.

Pillar 4: Entity and Authority Building

Strong entity presence increases AI confidence:

Entity Essentials for SaaS

| Source | Action | Priority |

|---|---|---|

| Crunchbase | Complete profile, funding, team | Critical |

| LinkedIn Company | Updated, active | Critical |

| AngelList/Wellfound | If investor-relevant | High |

| Wikipedia | If meeting notability | High |

| Wikidata | Entry with properties | Medium |

Industry Authority

| Tactic | AI Impact |

|---|---|

| Analyst reports | Gartner, Forrester mentions carry weight |

| Industry awards | G2 badges, category wins |

| Conference keynotes | Video transcripts become training data |

| Podcast appearances | Transcripts are crawlable |

| Original research | Creates unique citation opportunities |

Founder/Team Visibility

For startups especially, founder visibility helps:

- LinkedIn thought leadership

- Personal brand Schema markup

- Media appearances

AI often associates products with people.
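Personal brand Schema markup usually means a schema.org `Person` object that links the founder to the company and to their public profiles. A possible sketch follows; the function name, the example person, and the profile URLs are all hypothetical.

```python
import json


def build_person_schema(name, job_title, company, profile_urls):
    """Build schema.org Person markup tying a founder to the company."""
    return {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "worksFor": {"@type": "Organization", "name": company},
        # sameAs points at profiles that describe the same person elsewhere.
        "sameAs": list(profile_urls),
    }


# All values below are invented placeholders.
founder = build_person_schema(
    "Jane Example",
    "Co-founder and CEO",
    "Your SaaS Product",
    ["https://www.linkedin.com/in/jane-example"],
)
print(json.dumps(founder, indent=2))
```

The `sameAs` links are what connect the person on your site to the same identity on LinkedIn and other profiles.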

Pillar 5: Feature-Based Positioning

AI matches features to user needs. Make your features explicitly discoverable:

Feature Documentation

| Element | Purpose |

|---|---|

| Feature pages | Dedicated pages for each major feature |

| Integration directory | List all integrations explicitly |

| Use case pages | "[Product] for [Use Case]" pages |

| Industry pages | "[Product] for [Industry]" pages |

Semantic Feature Clustering

Think about how users ask for features:

| User Prompt | Your Content Should Target |

|---|---|

| "CRM with email integration" | Page about email integration |

| "Project management for agencies" | Page about agency use case |

| "Cheap alternative to Salesforce" | Pricing + comparison page |

| "HIPAA-compliant CRM" | Compliance page |

The Feature-Use Case Matrix

Map features to user intents:

| Feature | User Intent | Content Needed |

|---|---|---|

| Email automation | "CRM with email" | Feature page + use cases |

| Workflow builder | "Automate sales process" | How-to page + examples |

| API access | "Integrate with my stack" | API docs + integration page |

| Reporting | "Track sales performance" | Analytics feature page |
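The matrix above can double as a content gap checklist. The sketch below encodes the four rows and compares them with the pages you have already published. The data layout, the function name, and the example inventory are assumptions made for illustration.

```python
# Rows taken from the Feature-Use Case Matrix above.
FEATURE_MATRIX = [
    {"feature": "Email automation", "intent": "CRM with email",
     "content": ["feature page", "use cases"]},
    {"feature": "Workflow builder", "intent": "Automate sales process",
     "content": ["how-to page", "examples"]},
    {"feature": "API access", "intent": "Integrate with my stack",
     "content": ["API docs", "integration page"]},
    {"feature": "Reporting", "intent": "Track sales performance",
     "content": ["analytics feature page"]},
]


def find_content_gaps(matrix, published):
    """Return, per feature, the content types that are not yet published."""
    gaps = {}
    for row in matrix:
        have = published.get(row["feature"], set())
        missing = [item for item in row["content"] if item not in have]
        if missing:
            gaps[row["feature"]] = missing
    return gaps


# Hypothetical inventory of what already exists on the site.
published = {
    "Email automation": {"feature page"},
    "API access": {"API docs", "integration page"},
}
print(find_content_gaps(FEATURE_MATRIX, published))
```

Features that come back with every content type missing are the natural candidates for the feature pages created in Week 7.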

The 90-Day SaaS GEO Sprint

Here's a tactical implementation plan:

Days 1-30: Foundation

| Week | Focus | Actions |

|---|---|---|

| Week 1 | Technical | Fix robots.txt, implement Schema, audit page speed |

| Week 2 | Profiles | Complete G2, Capterra, Crunchbase profiles |

| Week 3 | Pricing | Make pricing public and structured |

| Week 4 | Audit | Run visibility tests, document baseline |

Days 31-60: Content

| Week | Focus | Actions |

|---|---|---|

| Week 5 | Comparisons | Create 3 comparison pages for top competitors |

| Week 6 | Comparisons | Create 2 more + alternatives page |

| Week 7 | Features | Create/optimize key feature pages |

| Week 8 | FAQs | Add FAQ schema to all major pages |

Days 61-90: Authority

| Week | Focus | Actions |

|---|---|---|

| Week 9 | Reviews | Launch review collection campaign |

| Week 10 | Press | Pursue 2-3 industry publication mentions |

| Week 11 | Community | Increase Reddit/forum presence authentically |

| Week 12 | Measure | Re-audit visibility, document improvements |

Expected Results

| Metric | Example Baseline | Day 90 Target |

|---|---|---|

| Category visibility | 10% | 30%+ |

| Comparison wins | 20% | 50%+ |

| AI description accuracy | 60% | 90%+ |

| Review volume | Varies | +50 reviews |

Track these metrics continuously using AICarma to monitor visibility trends and compare against competitors in a real-time Visibility & Sentiment matrix.

FAQ

My product is new—can AI even know about it?

For live RAG-based systems (Perplexity, ChatGPT with web search), yes, if your site is crawlable and well-structured. For training-data-based knowledge, you need presence in sources included in the next training cutoff, and the timing of those cutoffs varies by model and is not always announced. Focus on robots.txt and review platforms for quickest impact.

We're in a niche category—does SaaS GEO still matter?

Even more so. In niche categories, there are fewer competitors for AI visibility. You can more easily become the default recommendation. The strategies are the same; the competitive dynamics are often easier.

How do we balance AI optimization with traditional SEO/marketing?

They're mostly complementary. Many GEO tactics (Schema markup, quality content, review presence) benefit SEO too. The main addition is prompt testing and specific AI-focused optimizations. Consider GEO as an evolution of your SEO strategy, not a replacement.

What if AI recommends us with incorrect information?

This happens. Fix it by updating all authoritative sources (entity strategy), adding correct information via Schema, and creating content that corrects the misperception. Over time, new AI training will incorporate corrections.

How do we measure success?

Track AI Visibility Score (percentage of relevant prompts where you appear), comparison win rate, and AI-referred traffic (from referrer analysis). Also monitor branded search volume, which often increases when AI visibility improves.
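Two of these measurements are easy to approximate in a few lines. The sketch below computes a visibility score as the share of collected AI responses that mention your brand, and labels a referrer URL as AI traffic when its hostname matches a known assistant. Both function names are hypothetical, the brand match is a simple case-insensitive substring check, and the hostname list is an assumption that should be compared against your own analytics data.

```python
from urllib.parse import urlparse

# Assumed hostnames for common assistants; verify against your own referrer logs.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}


def visibility_score(responses, brand):
    """Percentage of responses that mention `brand` (case-insensitive substring)."""
    if not responses:
        return 0.0
    hits = sum(brand.lower() in response.lower() for response in responses)
    return 100.0 * hits / len(responses)


def classify_referrer(referrer):
    """Return the assistant name for an AI referrer URL, or None for anything else."""
    host = (urlparse(referrer).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host)


responses = [
    "For a 50-person team, consider Acme CRM or Rival CRM.",
    "Popular options include Rival CRM and Other CRM.",
]
print(visibility_score(responses, "Acme CRM"))
print(classify_referrer("https://www.perplexity.ai/search?q=best+crm"))
```

Substring matching will miss misspellings and abbreviations of the brand name, so treat the score as a lower bound and spot-check a sample of responses by hand.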