Surviving the Shift: A Strategic Playbook for Managing AI Search Volatility

Last Updated: August 30, 2025

We've all seen it happen: Monday, your brand is the top recommendation in ChatGPT for your category. Tuesday, a model update rolls out, the temperature shifts, and you vanish. Wednesday, you're back—but in third position. Friday, you're gone again.

As we documented in our comprehensive Guide to AI Search Volatility, this instability isn't a bug. It's the fundamental nature of probabilistic systems. AI recommendations are generated through sampling from probability distributions—not retrieved from a fixed index.

Understanding why it happens was step one. This article is step two: building a strategic playbook for surviving, exploiting, and ultimately thriving in a volatile AI search environment.

Table of Contents

- The Cost of Volatility Panic

- Strategy 1: The Portfolio Approach

- Strategy 2: The Owned Data Moat

- Strategy 3: The Corroboration Shield

- Strategy 4: The Trend-Based Reporting Framework

- Strategy 5: Exploiting Volatility Windows

- The Science of AI Instability

- Building an Anti-Fragile Brand

- Industry-Specific Volatility Playbooks

- FAQ

The Cost of Volatility Panic

Before we discuss solutions, let's quantify the problem. Volatility itself doesn't destroy brands. Panic-driven reactions to volatility destroy them.

We've observed teams make these costly mistakes:

| Panic Reaction | Actual Cost | Better Response |

|---|---|---|

| "We dropped in ChatGPT! Rewrite everything!" | $15-30K in content production wasted on noise | Wait 7 days. Check if it's a trend or a blip. |

| "Turn off the AI monitoring—the numbers are too scary." | Lost early warning on genuine drops | Shift from daily snapshots to weekly moving averages |

| "Our competitor overtook us—match their strategy!" | Copying tactics without understanding context | Competitive analysis to understand why they gained |

| "AI is too unpredictable. Let's focus on Google only." | Abandoning a channel growing 300% yearly | Accept volatility as the cost of early-mover advantage |

Research from Kahneman's behavioral economics work (Nobel Prize, 2002) demonstrates that humans are loss-averse: losses feel roughly twice as painful as equivalent gains feel pleasurable. This means a 10-point visibility drop feels catastrophic even if last week brought a 10-point gain. The antidote is systematic, data-driven strategy—not emotional reaction.

Strategy 1: The Portfolio Approach

In finance, you don't bet everything on one stock. In GEO, betting everything on one model is equally dangerous.

Model Diversification

Different AI models have fundamentally different architectures, training data, and retrieval mechanisms:

| Model | Architecture | Volatility Profile | Optimization Focus |

|---|---|---|---|

| ChatGPT | Training-heavy, intermittent web browsing | Moderate (shifts with model updates) | Training data presence, entity strength |

| Perplexity | RAG-heavy, real-time web search | High (changes with every crawl cycle) | RAG optimization, content freshness |

| Claude | Training-heavy, citation-conservative | Low-Moderate (stable but hard to influence) | Authority signals, academic corroboration |

| Gemini | Hybrid training + Google Search integration | Moderate | Traditional SEO + entity optimization |

| Copilot | Bing integration + GPT backbone | Moderate-High | Bing SEO, structured content formats |

The Portfolio Principle: If your visibility drops in ChatGPT due to a model update, your strong Perplexity presence hedges the impact. You maintain category visibility even while one channel fluctuates.

Research from Zhao et al. ("Survey of Large Language Models," 2023) confirms that model architectures produce measurably different output distributions for identical inputs—scientifically validating the diversification thesis.

Source Diversification

Beyond model diversity, diversify the sources AI draws from:

- If AI primarily cites G2 reviews for your category, don't rely solely on your blog

- Build "satellite authority" across Reddit, review platforms, industry press, and community forums

- If one source loses AI weight (common during model updates), others maintain your visibility floor

Our Reddit GEO Strategy guide covers one of the highest-impact diversification channels in detail.

Strategy 2: The Owned Data Moat

The most powerful hedge against volatility is proprietary data—information that exists nowhere else and must be attributed to you.

Why Generic Content Creates Vulnerability

If you publish generic "How to Choose a CRM" content, an AI can synthesize equivalent information from 100 other sources. You are commoditized. The AI doesn't need you specifically—it needs the information, and there are many alternative providers.

When a model update shifts source weights, commoditized content providers are the first casualties.

Building a Data Moat

| Owned Data Type | Why It's Defensible | Example |

|---|---|---|

| Original Research | Only you have these numbers | "We surveyed 500 marketing leaders and found..." |

| Proprietary Benchmarks | Can't be replicated without your data | "Our analysis of 1,000+ brand monitors shows..." |

| Unique Methodologies | Your framework, your IP | The T.R.U.S.T. Framework itself |

| First-Person Case Studies | Your clients, your results | Anonymized success stories with real metrics |

| Industry-Specific Data Sets | Niche expertise that general AI lacks | Vertical-specific trend data and insights |

When an AI needs to cite specific data, it must attribute the source. Proprietary data creates citation gravity—a pull toward your content that persists through model updates.

Research from Petroni et al. ("Language Models as Knowledge Bases?," EMNLP 2019) demonstrates that LLMs develop stronger associations with distinctive, factual content than with generic reformulations. Uniqueness increases memorization probability.

Strategy 3: The Corroboration Shield

As we explored in our Training Data SEO guide, AI models assign confidence based on corroboration—finding the same fact across multiple independent sources.

The Corroboration Confidence Curve

| Number of Independent Sources | AI Confidence Level |

|---|---|

| 1 (your website only) | Low — model may qualify with "some sources suggest..." |

| 2-3 (website + 1-2 external) | Moderate — model cites with reasonable confidence |

| 5+ (website + reviews + press + Reddit + Wikipedia) | High — model states as established fact |

| 10+ (saturated corroboration) | Very High — even model updates rarely dislodge you |

The confidence curve isn't linear—it follows a logarithmic pattern. The jump from 1 to 3 sources produces more stability gain than the jump from 7 to 10. Focus your initial effort on getting corroborated in 3-5 high-weight sources before spreading thin.

This principle was formalized by Dong et al. in their Knowledge Vault research ("Knowledge Vault: A Web-Scale Approach to Probabilistic Knowledge Fusion," KDD 2014), which demonstrated that multi-source fusion dramatically improves knowledge confidence in automated systems.

Practical Corroboration Actions

- Ensure your key claims appear on your website, Wikipedia (if eligible), at least 2 review platforms, and 1+ industry publication

- Build your Entity presence across Wikidata, Crunchbase, LinkedIn Company Page, and Google Knowledge Panel

- Earn genuine mentions in Reddit discussions—not marketing posts, but helpful community contributions

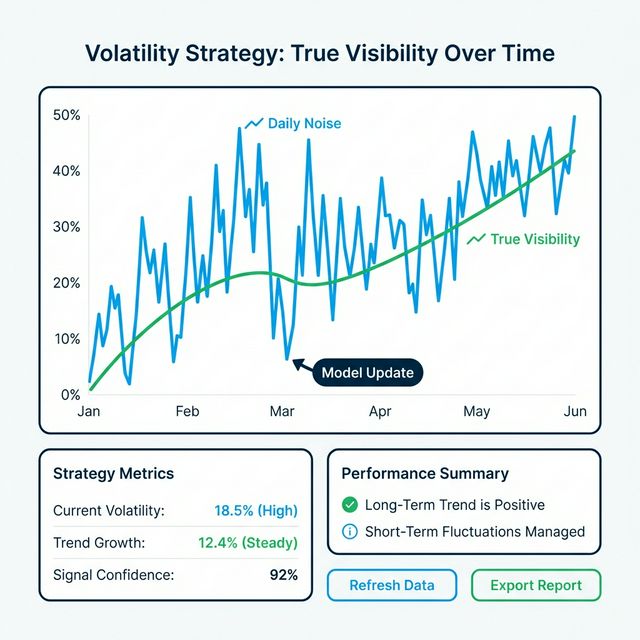

Strategy 4: The Trend-Based Reporting Framework

Perhaps the most immediately actionable strategy: change how you report AI performance to stakeholders.

Why Traditional Reporting Fails

When your CMO asks "What's our ranking in ChatGPT?", the honest answer is complex. But complexity doesn't fly in executive meetings.

Traditional SEO reporting works because the underlying system is relatively stable:

- "We rank #3 for [keyword]" — meaningful, verifiable, stable

- "Our ranking improved from #7 to #4" — clear direction

AI reporting requires a paradigm shift because the underlying system is probabilistic:

- "Our Visibility Rate this week is 52%" — snapshot, incomplete

- "Our 4-week average Visibility is 50%, up from 42% last quarter" — trend, meaningful

The Recommended Executive Dashboard

| Metric | This Period | Previous | 4-Week MA | Trend |

|---|---|---|---|---|

| AI Visibility Rate | 52% | 48% | 50% | ↗️ Improving |

| Sentiment Score | 8.2/10 | 8.0/10 | 8.1/10 | → Stable |

| Cross-Model Coverage | 4/5 models | 3/5 | 3.5/5 | ↗️ Improving |

| Competitor A Visibility | 45% | 47% | 46% | ↘️ Declining |

| Volatility Band | ±8% | ±12% | ±10% | ↗️ Stabilizing |

Notice the key innovation: the Volatility Band (±%) is itself a metric. A shrinking volatility band means your position is hardening—a better result than a higher visibility number with wider swings.

The "Weather Forecast" Analogy

"Your AI visibility is like a weather forecast. If we say there's a 70% chance of rain, and it doesn't rain, did we get it wrong? No—we got it right 30% of the time, which was always possible. Similarly, if our AI Visibility Rate is 50%, we expect to appear in about half of relevant queries. Some days will be 40%, some 60%. The 4-week average tells us the true climate."

This framing, borrowed from Tetlock's Superforecasting (Crown, 2015), helps executives develop the right mental model for probabilistic metrics.

Strategy 5: Exploiting Volatility Windows

Here's the counterintuitive insight: volatility isn't just a threat. It's an opportunity.

The "Open Window" Theory

If AI recommendations were perfectly stable—like the old Google Page One—top incumbents would be permanently locked in. New entrants would have no path to visibility.

But volatility creates windows of opportunity:

| Volatility Event | What It Means | Your Move |

|---|---|---|

| Model update shuffles rankings | Top positions are temporarily "unlocked" | Deploy your strongest content immediately |

| Competitor's visibility drops | AI is "forgetting" them temporarily | Increase your signal on their weakened queries |

| New query category emerges | No incumbent has established authority | Create authoritative content fast for first-mover advantage |

| RAG source rotation | The AI is looking at different sources | Ensure you're present across more sources |

Timing Your Moves

Major model updates are often announced (or detected by the community within hours). When an update drops:

- Monitor immediately — AICarma users receive automated alerts when their metrics shift significantly

- Assess within 48 hours — Is the shift beneficial (opportunity) or detrimental (threat)?

- Act within 1 week — Deploy new content, update existing pages, strengthen weaker pillars

- Measure within 2 weeks — Did the response stabilize your position?

This cadence is faster than traditional SEO's quarterly reactions—and it needs to be, because the environment changes faster.

The Science of AI Instability

For the technically curious, here's why AI results are fundamentally volatile.

Temperature and Nucleus Sampling

LLMs generate text by predicting the next most likely token. The temperature parameter controls how "creative" this prediction is:

- Temperature 0.0: Always picks the most probable token (deterministic, minimal variety)

- Temperature 0.7: Samples from a wider range of probable tokens (balanced)

- Temperature 1.0+: Samples broadly, including lower-probability tokens (creative, high variance)

As documented by Holtzman et al. ("The Curious Case of Neural Text Degeneration," ICLR 2020), even moderate temperature settings produce meaningful output variation across repeated runs with identical inputs.

You can't control the temperature—only the AI provider can. But you can build such a strong probability mass around your brand that even high-temperature sampling frequently selects you.

Model Update Frequency

Chen et al. ("How is ChatGPT's Behavior Changing Over Time?," 2023) tracked GPT-3.5 and GPT-4 behavior over months and found significant behavioral drift—even between ostensibly identical model versions. This "silent drift" means your visibility can shift without any announced update.

The implication: continuous monitoring isn't optional. It's the only way to detect silent changes before they compound.

Building an Anti-Fragile Brand

The ultimate goal isn't just surviving volatility—it's becoming anti-fragile (Taleb, Antifragile, Random House, 2012): a brand that actually benefits from instability.

Anti-fragile brands share these traits:

- Deep Entity Presence: Their Knowledge Graph profile is so strong that even major model updates preserve their associations

- Multi-Source Corroboration: They're cited on 10+ independent sources, making single-source disruption irrelevant

- Proprietary Data: They own unique information that AI must attribute to them

- Diversified Model Coverage: They appear across all major models, not just one

- Rapid Response Capability: They detect changes within 48 hours and deploy responses within a week (GEO Flywheel)

Industry-Specific Volatility Playbooks

Different industries face different volatility profiles. Tailor your strategy accordingly:

Low Volatility: Healthcare & Finance

Why: AI applies strict YMYL safety filters. Recommendations are conservative and change slowly. Strategy: Invest heavily in Trust pillar. Once established, positions are durable. But entry is deliberately hard.

Medium Volatility: SaaS & B2B

Why: Multiple comparable options, with quality documentation and reviews. Strategy: Differentiate via proprietary benchmarks. SaaS GEO Playbook covers this in depth.

High Volatility: E-commerce & Local

Why: Pricing, availability, and reviews change constantly. Strategy: Speed and freshness matter more. Maintain current reviews and real-time structured data.

FAQ

Should I check my AI visibility daily?

No. Daily checks create anxiety without information. Daily fluctuations are mostly noise—an effect of temperature sampling and retrieval variance. Check weekly moving averages. Exception: if your alerting system flags a drop exceeding 30%, investigate immediately.

My competitor's visibility spiked after a model update. Should I panic?

Not immediately. Model updates often create temporary reshuffling. Give it 2 weeks before concluding the change is permanent. During that window, analyze why they gained—did they publish new content? Did they earn a new authoritative citation? Then respond strategically, not reactively.

How much volatility is "normal"?

For category queries in competitive markets, expect ±15-20% week-over-week variation as a baseline. If your volatility is consistently below ±10%, your position is strong. If it's above ±25%, your position is fragile and needs corroboration work.

Can I use volatility data to predict model updates?

Sometimes. When volatility spikes simultaneously across multiple brands in the same category—but there's no obvious content change from any of them—it often indicates a model update or retrieval system change. We've observed this pattern reliably across AICarma's monitoring data.

What's the single most important thing for volatility resilience?

Entity strength. A strong, well-corroborated brand entity survives model updates better than anything else. Entities are the most persistent unit of knowledge in LLMs—they're embedded in the weights, corroborated across sources, and resistant to retrieval noise.