What Is llms.txt? The New Standard for AI-First Web Communication

Last Updated: May 30, 2025

In 1994, a simple text file called robots.txt was created to help webmasters control how search engines crawled their sites. Three decades later, that humble standard remains one of the most important files on every website.

Now, a new file is emerging to serve a similar purpose for the AI era: llms.txt.

While robots.txt tells AI crawlers where they can go, llms.txt tells AI systems what they should know about your brand. Think of it as giving an AI assistant a cheat sheet before a job interview about your company: you're curating the exact information you want it to have.

This isn't a distant, hypothetical idea. The format has already been proposed, and developers and forward-thinking organizations are adopting and refining it right now. Implementing it today could give you an early edge in Generative Engine Optimization.

Table of Contents

- The Problem llms.txt Solves

- What Is llms.txt?

- The Format and Specification

- Why Your Brand Needs llms.txt

- How to Create Your llms.txt

- llms.txt vs. robots.txt: Understanding the Difference

- Best Practices for llms.txt Content

- Who's Already Using llms.txt

- Implementation Checklist

- FAQ

The Problem llms.txt Solves

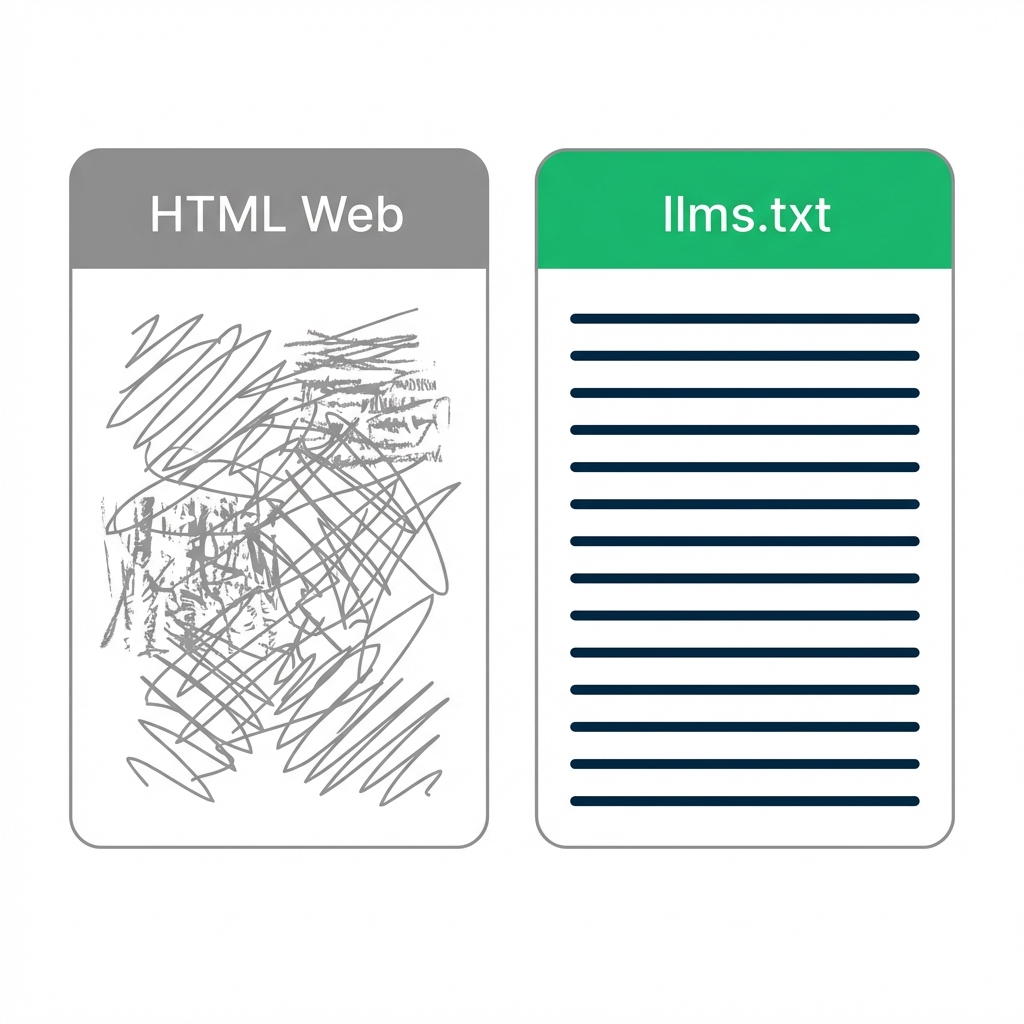

When an AI agent visits your website to answer a user's question, it faces a fundamental challenge: noise.

Your homepage is full of navigation menus, footer links, cookie consent banners, promotional sliders, and marketing copy. Buried somewhere in that noise is the actual information the AI needs: What do you do? What are your products? How much do they cost?

The AI has limited "attention" (context window space). It can't process everything. So it tries to extract the most relevant chunks—but it often grabs the wrong things or misses crucial details.

The Signal-to-Noise Problem

| Content Type | Signal (useful) | Noise (wasted context) |
|---|---|---|
| Homepage | Company description, products | Navigation, promos, footers |
| Product page | Features, pricing, specs | Social buttons, related products |
| Blog post | Core insights | Ads, pop-ups, author bio |
| About page | Company story | Team photos, culture fluff |

For every useful sentence, there might be 5-10 sentences of noise. With typical context windows of 32K-128K tokens, every token spent on navigation and banners is a token not available for the content that actually describes your business.
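To get a rough feel for this ratio on one of your own pages, the sketch below uses Python's standard-library `html.parser` to split a page's visible text into text inside typical page chrome (`nav`, `header`, `footer`, `aside`) and everything else, then converts characters to tokens with a crude four-characters-per-token rule of thumb. Both the tag list and the 4:1 ratio are assumptions for illustration; real tokenizers and page layouts differ.

```python
from html.parser import HTMLParser

# Assumption: text inside these elements is page chrome ("noise").
NOISE_TAGS = {"nav", "header", "footer", "aside"}
# Text inside these elements is never shown to readers, so it is ignored.
SKIP_TAGS = {"script", "style"}


class NoiseCounter(HTMLParser):
    """Counts visible characters inside and outside boilerplate elements."""

    def __init__(self):
        super().__init__()
        self.noise_depth = 0  # > 0 while inside a NOISE_TAGS element
        self.skip_depth = 0   # > 0 while inside script/style
        self.noise_chars = 0
        self.signal_chars = 0

    def handle_starttag(self, tag, attrs):
        if tag in NOISE_TAGS:
            self.noise_depth += 1
        elif tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in NOISE_TAGS and self.noise_depth:
            self.noise_depth -= 1
        elif tag in SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if not text or self.skip_depth:
            return
        if self.noise_depth:
            self.noise_chars += len(text)
        else:
            self.signal_chars += len(text)


def estimate_tokens(html: str) -> dict:
    """Rough token split using the ~4 characters per token heuristic."""
    counter = NoiseCounter()
    counter.feed(html)
    counter.close()
    signal = counter.signal_chars // 4
    noise = counter.noise_chars // 4
    total = signal + noise
    return {
        "signal_tokens": signal,
        "noise_tokens": noise,
        "noise_share": noise / total if total else 0.0,
    }


page = """
<html><body>
  <nav>Home Products Pricing Blog Careers Login Sign up</nav>
  <main><p>Acme sells invoicing software for small agencies.</p></main>
  <footer>Privacy Terms Cookies Twitter LinkedIn Copyright 2025</footer>
  <script>trackVisitor();</script>
</body></html>
"""
print(estimate_tokens(page))
```

Even on this tiny, made-up page, the navigation and footer outweigh the one sentence that says what the company does.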

llms.txt Solves This

llms.txt provides a curated, token-efficient manifest of the information AI should prioritize. It's like giving the AI a table of contents with direct links to the cleanest, most important resources.

What Is llms.txt?

llms.txt is a markdown file placed at the root of your domain (e.g., yourdomain.com/llms.txt) that serves as a machine-readable manifest for AI agents. It tells AI systems:

- What your company does (in a concise, quotable summary)

- Where the important content is (direct links to key resources)

- What to prioritize (ordered sections by importance)

The Vision

Imagine every AI interaction with your brand starting with the agent reading your llms.txt. Before it crawls your messy homepage, it has:

- A clean 100-word description of your company

- Links to your core product pages

- Links to pricing information

- Links to your most important documentation

The AI can then use its limited context window to retrieve the actual content from those curated pages, rather than wasting tokens on navigation menus and promotional banners.


The Format and Specification

llms.txt uses a simple markdown format, making it human-readable and machine-parseable:

Basic Structure

```markdown
# [Company Name]

> A brief description of your company in 1-3 sentences. This should be your elevator pitch: the core of what an AI should understand about you.

## Products

- [Product Name 1](/products/product-1/): Brief description
- [Product Name 2](/products/product-2/): Brief description

## Documentation

- [Getting Started](/docs/getting-started.md)
- [API Reference](/docs/api.md)
- [FAQ](/faq.md)

## Company Information

- [About Us](/about.md)
- [Pricing](/pricing.md)
- [Contact](/contact.md)

## Optional

- [Blog](/blog/)
- [Case Studies](/case-studies/)
```

Key Format Rules

| Element | Format | Purpose |
|---|---|---|
| Title | `# Company Name` | Top-level identifier |
| Description | Short paragraph (written as a `>` blockquote in the llmstxt.org proposal) | Company summary (keep under 150 words) |
| Sections | `## Section Name` | Organizes content types |
| Links | `[Text](/path/)` | Points to key resources |
| Link descriptions | Text after a colon | Brief context for the link |
| Optional section | `## Optional` | Links an AI can skip when it needs a shorter context |
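Because the format is plain markdown, reading it back takes only a few lines of code. The sketch below is our own simplified interpretation of the rules in the table above, not a reference parser: it treats the first `#` line as the title, any `>` or plain lines before the first `##` heading as the summary, and `- [text](url): notes` items under each `##` heading as links.

```python
import re
from dataclasses import dataclass, field

# "- [Link text](url)" with an optional ": notes" suffix.
LINK_RE = re.compile(r"^-\s*\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*))?$")


@dataclass
class Link:
    title: str
    url: str
    notes: str = ""


@dataclass
class LlmsTxt:
    title: str = ""
    summary: str = ""
    sections: dict = field(default_factory=dict)  # section name -> list[Link]


def parse_llms_txt(text: str) -> LlmsTxt:
    doc = LlmsTxt()
    summary_lines = []
    current = None  # name of the "##" section we are in, if any
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("## "):
            current = line[3:].strip()
            doc.sections[current] = []
        elif line.startswith("# ") and not doc.title:
            doc.title = line[2:].strip()
        elif current is None:
            # Text before the first section is the summary ("> " or plain).
            summary_lines.append(line.lstrip("> ").strip())
        else:
            m = LINK_RE.match(line)
            if m:
                doc.sections[current].append(
                    Link(m.group(1), m.group(2), m.group(3) or "")
                )
    doc.summary = " ".join(summary_lines)
    return doc


sample = """# Acme
> Acme sells invoicing software for small agencies.

## Docs
- [Pricing](/docs/pricing.md): Plans and prices
- [FAQ](/docs/faq.md)

## Optional
- [Blog](/blog/)
"""
doc = parse_llms_txt(sample)
print(doc.title, [link.url for link in doc.sections["Docs"]])
```

A consumer working under a tight context budget could, for example, drop `doc.sections["Optional"]` before fetching anything.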

Extension: llms-full.txt

Some proposals include a companion file llms-full.txt that contains the actual content in markdown format, eliminating the need for the AI to follow links:

```markdown
# AICarma

> AICarma is an AI visibility monitoring platform that helps brands track and optimize their presence in ChatGPT, Claude, Gemini, and other LLM responses. Founded in 2023, we serve over 500 B2B companies.

## Full Content

### Pricing

AICarma offers three plans:

- Starter: $99/month - 10 tracked queries, 3 AI models
- Pro: $299/month - 50 tracked queries, all AI models
- Enterprise: Custom pricing - Unlimited queries, API access

[Full content continues...]
```
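One straightforward way to produce llms-full.txt is to expand each link in llms.txt into the content it points to. The sketch below assumes a hypothetical layout in which your clean markdown files sit in a local directory whose paths mirror the link paths; it keeps the title, summary, and section headings, inlines each local file under a `###` heading, and leaves external URLs and missing files as plain links.

```python
import re
import tempfile
from pathlib import Path

# Start of a "- [text](path)" list item; any ": notes" suffix is ignored.
LINK_RE = re.compile(r"^-\s*\[([^\]]+)\]\(([^)\s]+)\)")


def build_llms_full(llms_txt: str, site_root: Path) -> str:
    """Inline local links (hypothetical layout: link path == file path)."""
    out = []
    for line in llms_txt.splitlines():
        m = LINK_RE.match(line.strip())
        if not m:
            out.append(line)  # title, summary, headings: keep as-is
            continue
        title, path = m.groups()
        if "://" in path:
            out.append(line)  # external URL: keep the link
            continue
        local = site_root / path.lstrip("/")
        if not local.is_file():
            out.append(line)  # directory or missing file: keep the link
            continue
        body = local.read_text(encoding="utf-8").strip()
        out.append(f"### {title}\n\n{body}\n")
    return "\n".join(out)


# Usage: a throwaway site with one clean markdown page.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "docs").mkdir()
    (root / "docs" / "pricing.md").write_text("Starter: $99/month\n", encoding="utf-8")
    manifest = "# Acme\n\n## Docs\n- [Pricing](/docs/pricing.md): Plans\n- [Blog](/blog/)\n"
    full = build_llms_full(manifest, root)
print(full)
```

Regenerating this file from the same sources as llms.txt keeps the two from drifting apart.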

Why Your Brand Needs llms.txt

Reason 1: Control the Narrative

Without llms.txt, AI systems piece together their understanding of your brand from whatever they can find—which might be outdated blog posts, random press mentions, or even competitor comparison pages.

With llms.txt, you direct AI to the canonical, authoritative sources you choose.

Reason 2: Reduce Hallucination Risk

When AI has to infer information from noisy web pages, it sometimes guesses wrong. By providing clean, structured information, you reduce the risk of AI telling users wrong things about your products.

Reason 3: Improve RAG Performance

When AI systems use Retrieval-Augmented Generation, they need to retrieve relevant content. llms.txt helps by:

- Pointing to clean markdown versions of key content

- Reducing token waste on navigation/UI elements

- Providing explicit priority signals

Reason 4: Future-Proof Your AI Presence

AI capabilities are evolving rapidly. Standards like llms.txt may become as important as robots.txt. Early adoption means:

- Having AI-ready content in place before competitors do

- Establishing best practices before they're required

- Building institutional knowledge about AI optimization

How to Create Your llms.txt

Step 1: Audit Your Critical Content

Identify the pages that AI most needs to know about:

| Priority | Content Type | Why It Matters |
|---|---|---|
| Critical | Product/service pages | Core offering definition |
| Critical | Pricing page | Agents need this for comparisons |
| High | FAQ/Help pages | Direct Q&A for AI to cite |
| High | About/Company page | Entity information |
| Medium | Documentation | Technical details |
| Medium | Key blog posts | Thought leadership |

Step 2: Create Clean Markdown Versions

For maximum effectiveness, create stripped-down markdown versions of key pages:

| Original | Clean Version | Purpose |
|---|---|---|
| /pricing/ | /docs/pricing.md | Remove nav, just pricing data |
| /about/ | /docs/about-company.md | Remove fluff, just facts |
| /products/x/ | /docs/product-x.md | Specs and features only |

These clean versions become what you link to in llms.txt.
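Producing these clean versions can be partly automated. The sketch below, built only on Python's standard-library `html.parser`, keeps headings, paragraphs, and list items and drops everything inside `nav`, `header`, `footer`, `aside`, `script`, and `style`. It is deliberately minimal (no tables, links, or nested lists) and the tag choices are assumptions; a dedicated HTML-to-markdown tool or a hand-edited file will usually do better.

```python
from html.parser import HTMLParser

# Assumption: these elements hold chrome or code, not page content.
DROP_TAGS = {"nav", "header", "footer", "aside", "script", "style"}
# Block elements we keep, with the markdown prefix each one gets.
BLOCK_PREFIX = {"h1": "# ", "h2": "## ", "h3": "### ", "p": "", "li": "- "}


class CleanMarkdown(HTMLParser):
    """Very small HTML-to-markdown converter for page content (sketch)."""

    def __init__(self):
        super().__init__()
        self.drop_depth = 0  # > 0 while inside a DROP_TAGS element
        self.block = None    # block tag currently being collected
        self.buffer = []     # text fragments of the current block
        self.lines = []

    def handle_starttag(self, tag, attrs):
        if tag in DROP_TAGS:
            self.drop_depth += 1
        elif tag in BLOCK_PREFIX and not self.drop_depth:
            self.block, self.buffer = tag, []

    def handle_endtag(self, tag):
        if tag in DROP_TAGS:
            self.drop_depth = max(0, self.drop_depth - 1)
        elif tag == self.block:
            # Join fragments split by inline tags, then collapse whitespace.
            text = " ".join("".join(self.buffer).split())
            if text:
                self.lines.append(BLOCK_PREFIX[tag] + text)
            self.block = None

    def handle_data(self, data):
        if self.block and not self.drop_depth:
            self.buffer.append(data)


def html_to_clean_markdown(html: str) -> str:
    parser = CleanMarkdown()
    parser.feed(html)
    parser.close()
    return "\n\n".join(parser.lines)


page = """
<nav><a href="/">Home</a> <a href="/blog">Blog</a></nav>
<main>
  <h1>Pricing</h1>
  <p>Starter plan: <strong>$99/month</strong> for 10 tracked queries.</p>
  <ul><li>Pro: $299/month</li><li>Enterprise: custom pricing</li></ul>
</main>
<footer>Privacy | Terms</footer>
"""
print(html_to_clean_markdown(page))
```

Review the output by hand before publishing it: an automated pass cannot tell which facts are current.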

Step 3: Write Your llms.txt

```markdown
# YourCompany

> YourCompany is a [specific category] company that provides [core offering] to [target audience]. Founded in [year], we serve [number] customers including [notable names or segments].

## Core Documentation

- [Company Overview](/docs/about.md): Full company background and mission
- [Product Catalog](/docs/products.md): Complete list of offerings
- [Pricing](/docs/pricing.md): Detailed pricing for all plans

## Products

- [Product A](/docs/product-a.md): Brief 1-line description
- [Product B](/docs/product-b.md): Brief 1-line description

## Resources

- [FAQ](/docs/faq.md): Common questions answered
- [API Documentation](/docs/api.md): Technical integration details
- [Case Studies](/docs/case-studies.md): Customer success stories
```
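If your product and pricing data already live in a CMS or a config file, generating llms.txt from that data helps keep it in sync with the site. The sketch below renders a plain Python dictionary (a hypothetical structure of our own, not a standard schema) into the layout of this step, writing the summary as a `>` blockquote as the llmstxt.org proposal does.

```python
def render_llms_txt(site: dict) -> str:
    """Render a hypothetical {name, summary, sections} dict as llms.txt."""
    lines = [f"# {site['name']}", "", f"> {site['summary']}"]
    # Sections and links are emitted in insertion order (priority order).
    for section, links in site["sections"].items():
        lines += ["", f"## {section}", ""]
        for link in links:
            item = f"- [{link['title']}]({link['url']})"
            if link.get("notes"):
                item += f": {link['notes']}"
            lines.append(item)
    return "\n".join(lines) + "\n"


site = {
    "name": "YourCompany",
    "summary": "YourCompany provides [core offering] to [target audience].",
    "sections": {
        "Core Documentation": [
            {"title": "Pricing", "url": "/docs/pricing.md",
             "notes": "Detailed pricing for all plans"},
        ],
        "Resources": [
            {"title": "FAQ", "url": "/docs/faq.md",
             "notes": "Common questions answered"},
            {"title": "API Documentation", "url": "/docs/api.md"},
        ],
    },
}
print(render_llms_txt(site))
```

When saving the result, write it with `encoding="utf-8"` to match the deployment requirements in Step 4.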

Step 4: Deploy

Upload the file to your domain root:

- yourdomain.com/llms.txt

- Optionally: yourdomain.com/llms-full.txt

Ensure it's:

- Accessible without authentication

- Allowed in robots.txt

- UTF-8 encoded

- Valid markdown
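Two of these checks are easy to script. The sketch below uses Python's standard-library `urllib.robotparser` to test whether a given robots.txt lets a crawler fetch /llms.txt, and checks that the file's raw bytes decode as UTF-8. The domain is a placeholder, and the user agents are examples (GPTBot is OpenAI's crawler, ClaudeBot is Anthropic's); substitute the crawlers you care about.

```python
from urllib.robotparser import RobotFileParser


def llms_txt_allowed(robots_txt: str, user_agent: str,
                     site: str = "https://yourdomain.com") -> bool:
    """Return True if this robots.txt lets `user_agent` fetch /llms.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, f"{site}/llms.txt")


def is_utf8(data: bytes) -> bool:
    """Return True if the raw file bytes are valid UTF-8."""
    try:
        data.decode("utf-8")
        return True
    except UnicodeDecodeError:
        return False


robots = """
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
for agent in ("GPTBot", "ClaudeBot"):
    print(agent, llms_txt_allowed(robots, agent))
```

Note that `urllib.robotparser` applies rules in file order rather than using the longest-match precedence of RFC 9309, so treat its answer as a quick sanity check.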

llms.txt vs. robots.txt: Understanding the Difference

These files serve complementary purposes:

| Aspect | robots.txt | llms.txt |
|---|---|---|
| Purpose | Access control | Information curation |
| Tells AI | Where it CAN go | What it SHOULD know |
| Format | Specific directive syntax | Markdown |
| Status | Long-established; formalized as RFC 9309 (2022) and honored voluntarily by well-behaved crawlers | Emerging proposal |
| Controls | Crawl behavior | Content prioritization |
| Scope | Entire site structure | Key content subset |

They work together:

- robots.txt ensures AI can access the pages you want it to see

- llms.txt tells AI which of those pages are most important

- Schema Markup on those pages provides structured facts

Best Practices for llms.txt Content

Description Best Practices

| Do | Don't |
|---|---|
| "AICarma monitors brand visibility across ChatGPT, Claude, and Gemini" | "We're a cutting-edge AI company revolutionizing digital marketing" |
| "Pricing starts at $99/month for 10 tracked queries" | "Competitive pricing available" |
| "Founded in 2023, serving 500+ B2B SaaS companies" | "Trusted by leading companies worldwide" |

Link Organization

Order by importance: AI systems may use link order as a priority signal. Put your most important resources first.

Use descriptive link text: Instead of "Learn more," use "Complete pricing breakdown including enterprise plans."

Link to markdown, not HTML: If possible, link to clean .md files rather than HTML pages cluttered with navigation.

Content in llms.txt vs. Linked Files

Keep llms.txt itself concise (under 500 words). Detailed information should live in the linked files. Think of llms.txt as a catalog cover, not the full catalog.
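These guidelines can be turned into a quick lint pass before you publish. The sketch below flags a file over the 500-word target, generic link text such as "Learn more", and links that don't point at .md files. The thresholds come from this guide; the list of generic phrases is an illustrative assumption to extend.

```python
import re

LINK_RE = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")
# Illustrative list of vague link texts (assumption; extend as needed).
GENERIC_TEXT = {"learn more", "click here", "read more", "more", "here"}
MAX_WORDS = 500  # target from the best practices above


def lint_llms_txt(text: str) -> list[str]:
    """Return human-readable warnings for a draft llms.txt."""
    warnings = []
    words = len(text.split())
    if words > MAX_WORDS:
        warnings.append(f"File is {words} words; aim for under {MAX_WORDS}.")
    for label, url in LINK_RE.findall(text):
        if label.strip().lower() in GENERIC_TEXT:
            warnings.append(f"Vague link text {label!r} for {url}")
        path = url.split("#")[0].split("?")[0]  # ignore anchors and queries
        if not path.endswith(".md"):
            warnings.append(f"{url} is not a .md file; consider a clean markdown version")
    return warnings


draft = """# Acme
> Acme sells invoicing software.

## Docs
- [Learn more](/pricing/)
- [Complete pricing breakdown](/docs/pricing.md)
"""
for warning in lint_llms_txt(draft):
    print(warning)
```

Links under an `Optional` section, such as a blog index, will often trip the .md check; that is fine as long as it is a deliberate choice.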

Who's Already Using llms.txt

While llms.txt is still emerging, early adoption is happening:

Tech Companies

Several AI and developer-focused companies have implemented llms.txt or similar manifests:

- Various open-source projects

- Developer documentation sites

- API-first companies

Documentation Platforms

Sites built on platforms like Notion, GitBook, and ReadTheDocs are natural fits for llms.txt because their content is already structured documentation that can be published or exported as clean markdown.

Early Adopter Signals

Running a search such as site:domain.com llms.txt against the domains of various tech companies, or simply requesting /llms.txt on those domains, turns up early examples. The standard is still evolving, so implementations vary.

Implementation Checklist

Use this checklist to implement llms.txt:

Pre-Implementation

- [ ] Audit: List your 10-20 most important pages

- [ ] Create: Clean markdown versions of critical content

- [ ] Write: Company description in 2-3 factual sentences

- [ ] Prioritize: Order content by importance

Implementation

- [ ] Create llms.txt file in markdown format

- [ ] Upload to domain root (/llms.txt)

- [ ] Verify robots.txt allows access to the file

- [ ] Test: Ensure all linked resources are accessible

- [ ] Optional: Create llms-full.txt with inline content

Post-Implementation

- [ ] Monitor: Check AI responses for improved accuracy

- [ ] Update: Revise when products/pricing change

- [ ] Expand: Add new important resources as created

- [ ] Test: Periodically verify file is accessible

Validation Questions

- [ ] Can I find /llms.txt from any browser without login?

- [ ] Do all links in llms.txt resolve correctly?

- [ ] Is the markdown valid and well-formatted?

- [ ] Is the description factual, not marketing fluff?
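The "do all links resolve" question is easy to automate. The sketch below resolves each link in llms.txt against your domain with `urllib.parse.urljoin`, sends a HEAD request with `urllib.request`, and reports anything that errors or doesn't end in a 2xx status. The domain is a placeholder, and some servers reject HEAD requests, so treat a failure as a prompt to look closer rather than a verdict.

```python
import re
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen

LINK_RE = re.compile(r"\[[^\]]+\]\(([^)\s]+)\)")


def absolute_links(llms_txt: str, base_url: str) -> list[str]:
    """Resolve every markdown link target against the site's base URL."""
    return [urljoin(base_url, target) for target in LINK_RE.findall(llms_txt)]


def broken_links(llms_txt: str, base_url: str,
                 timeout: float = 10.0) -> list[tuple[str, str]]:
    """HEAD each link (redirects are followed); return (url, problem) pairs."""
    problems = []
    for url in absolute_links(llms_txt, base_url):
        try:
            with urlopen(Request(url, method="HEAD"), timeout=timeout) as resp:
                if not 200 <= resp.status < 300:
                    problems.append((url, f"HTTP {resp.status}"))
        except HTTPError as err:  # 4xx/5xx responses
            problems.append((url, f"HTTP {err.code}"))
        except OSError as err:  # DNS failures, refused connections, timeouts
            problems.append((url, str(getattr(err, "reason", err))))
    return problems


# Usage (makes real network requests; the domain is a placeholder):
# with open("llms.txt", encoding="utf-8") as f:
#     for url, problem in broken_links(f.read(), "https://yourdomain.com/"):
#         print(url, problem)
```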

FAQ

Is llms.txt an official standard?

Not yet. It's a community-driven proposal, published at llmstxt.org by Jeremy Howard of Answer.AI in September 2024, that's gaining traction among AI developers and forward-thinking organizations. It's not an IETF standard, but practical adoption is growing. Even without official standardization, having clean, curated information for AI is valuable.

Will ChatGPT/Claude/Gemini actually read my llms.txt?

Major AI platforms haven't officially announced llms.txt support. However, the principle remains valuable: having clean, markdown-formatted versions of your key content makes it easier for any AI system to understand you, whether it reads llms.txt explicitly or discovers your clean docs through normal crawling.
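One way to see whether anything actually requests your file is to check your server access logs. The sketch below assumes the common Apache/Nginx "combined" log format and counts requests for /llms.txt and /llms-full.txt per user agent; the regex, the sample lines, and the log file name are assumptions to adapt to your own setup.

```python
import re
from collections import Counter

# Combined log format: ip - - [time] "METHOD /path HTTP/x" status size "referer" "agent"
LOG_RE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
TARGETS = {"/llms.txt", "/llms-full.txt"}


def llms_txt_hits(log_lines) -> Counter:
    """Count requests for the llms.txt files, keyed by (path, user agent)."""
    hits = Counter()
    for line in log_lines:
        m = LOG_RE.search(line)
        if not m:
            continue
        path = m.group("path").split("?")[0]  # ignore query strings
        if path in TARGETS:
            hits[(path, m.group("agent"))] += 1
    return hits


sample = [
    '203.0.113.5 - - [30/May/2025:10:00:00 +0000] "GET /llms.txt HTTP/1.1" 200 812 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [30/May/2025:10:01:00 +0000] "GET /pricing/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]
print(llms_txt_hits(sample))

# On a real server (hypothetical path):
# with open("access.log", encoding="utf-8", errors="replace") as f:
#     print(llms_txt_hits(f).most_common(10))
```

User-agent strings can be spoofed, so treat the counts as a rough signal of interest rather than proof of support.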

Can I password-protect llms.txt?

No. The file is meant to be publicly accessible. Never put sensitive information in llms.txt. It should only contain information you want AI systems to know about publicly.

How often should I update llms.txt?

Update whenever significant changes occur: new products, pricing changes, major documentation updates. A good rule is to review quarterly or whenever you'd update your pitch deck.

Does llms.txt replace Schema Markup?

No. They serve different purposes. Schema Markup (JSON-LD) provides structured facts about specific pages. llms.txt provides a curated directory of important resources. Use both: Schema on every page, llms.txt at domain root.

What if I have multiple brands or products?

You can organize llms.txt with sections for each brand/product, or use separate subdomains with their own llms.txt files. For conglomerates, consider a hierarchy: main llms.txt linking to brand-specific manifests.