Google's AI Overviews Can Scam Your Customers: The New Face of Invisible Brand Syndrome

Last Updated: February 15, 2026

There is a quiet crisis happening in the search results for major brands, and it has nothing to do with traditional SEO rankings.

It happens in the split second before a user clicks. It happens in the "zero-click" space that Google now dominates. And for many brands, it is the most damaging manifestation of Invisible Brand Syndrome we have ever seen.

The crisis is this: Google's AI Overviews are hallucinating fake customer support numbers for legitimate brands.

This isn't just a "user safety" issue or a "tech glitch." For a B2B enterprise or a high-volume B2C brand, this is a Knowledge Graph hijacking. It is a direct assault on the entity relationships that define your brand in the age of AI.

When an AI search engine—whether it's Google Gemini, ChatGPT, or Perplexity—confidently presents a scammer's phone number as your official support line, the damage to your reputation is immediate and often irreversible.

This guide explores the mechanics of this new threat, why your "classic" reputation management tools are failing to detect it, and how to use Entity SEO to secure your brand's digital identity in 2026.

Table of Contents

- The Anatomy of an AI Overview Scam

- Why This Is "Invisible Brand Syndrome" on Steroids

- The Mechanism: How Black Box Algorithms Ingest Spam

- The Entity SEO Defense Strategy

- How AICarma Detects Hallucinations

- FAQ

The Anatomy of an AI Overview Scam

To understand the threat, we must look beyond the anecdote and analyze the system failure.

In a traditional search world (pre-2023), if a scammer wanted to hijack your customer support traffic, they had to buy Google Ads (expensive and heavily policed) or rank an organic page (difficult against a high-authority brand domain).

In the Answer Engine era, the barrier to entry has collapsed.

The "Hallucination Injection"

Recent reports from Wired, The Washington Post, and our own forensic analysis at AICarma have identified a recurring pattern:

- The User Query: A high-intent, distress-driven query like "Delta Airlines support phone number" or "Chase bank fraud line."

- The AI Synthesis: Instead of a list of blue links, Google's AI Overview generates a confident, singular answer card.

- The Injection: Embedded within this "verified" box is a phone number that belongs not to the brand, but to a call center in a scam jurisdiction.

- The Conversion: Because the answer comes from Google's "omniscient" AI, the user trusts it implicitly. They dial, they are defrauded, and they blame the brand.

This is not a "phishing" attack in the traditional sense. The user didn't click a suspicious link. They didn't open a spam email. They trusted the infrastructure of the internet itself, and the infrastructure lied to them about who you are.

Why This Is "Invisible Brand Syndrome" on Steroids

We define Invisible Brand Syndrome as the state where AI models either don't know you exist, or confidently hallucinate incorrect facts about you.

Usually, we talk about this in the context of lost revenue—AI recommending a competitor because it doesn't understand your unique value proposition. But the "Scam Injection" variation is far more dangerous.

Trust Erosion at Scale

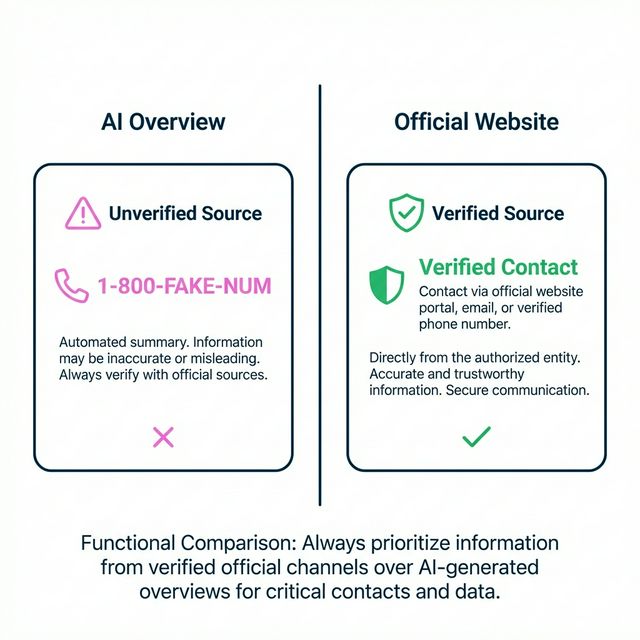

If a user clicks a blue link to a spam site, they might notice the URL is wrong. They might see the design is off. They have visual cues to warn them.

But an AI Overview strips away those context cues. It presents data as fact, stripped of its source provenance.

- No URL to check.

- No design to critique.

- No domain authority to evaluate.

It is pure information, presented with the authority of Google. When that information is a scam number, the user's brain registers the betrayal as a failure of the brand, not the search engine. "Why did Chase give me a fake number?" — not "Why did Google scrape a fake number?"

The "Black Box" Reporting Problem

Traditional brand monitoring tools (Brand24, Mention, Google Alerts) scrape the web. They look for keywords on forums, news sites, and social media.

They cannot scrape the AI.

Because AI Overviews are generated dynamically (and often nondeterministically) for each user, there is no static URL to crawl. A scam number might, for example, appear for 20% of users in New York between 2 PM and 4 PM, and then vanish.

This creates a terrifying blind spot for CMOs and CISOs: Your brand is being hijacked in the search results, and your dashboard shows all systems green.
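To make the blind spot concrete, here is a rough back-of-the-envelope sketch in Python. It assumes each sampled AI answer shows the scam number independently with probability p (the 20% figure is the illustrative one from above, not a measurement) and asks how many samples a monitor would need to see it at least once. The function names are hypothetical.

```python
import math


def detection_probability(p: float, n: int) -> float:
    """Chance that at least one of n independent samples shows the fake number."""
    return 1.0 - (1.0 - p) ** n


def samples_needed(p: float, target: float) -> int:
    """Smallest n for which detection_probability(p, n) reaches the target."""
    if not 0.0 < p < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("p and target must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


if __name__ == "__main__":
    # Illustrative assumption: the scam number appears in 20% of answers.
    print(samples_needed(0.20, 0.95))                  # 14
    print(round(detection_probability(0.20, 14), 3))   # 0.956
```

Under these assumptions a single manual check misses the problem four times out of five, while the sample count needed grows quickly as the hallucination gets rarer or more localized.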

The Mechanism: How Black Box Algorithms Ingest Spam

How does a trillion-dollar company like Google get tricked by low-level SEO spam? The answer lies in the shift from Index Retrieval to Probabilistic Synthesis.

The "Data Void" Vulnerability

AI models are voracious. They need to answer every query, even when high-quality data is sparse. Scammers exploit "Data Voids"—queries where official documentation is complex, buried in PDFs, or hard for a bot to parse.

If your official "Contact Us" page is a complex JavaScript web app protected by CAPTCHAs (to stop scrapers), you have ironically hidden your truth from the good bots too.

The "Parasite SEO" Tactic

Scammers plant fake numbers on high-authority domains that allow user-generated content (Reddit, Quora, LinkedIn Pulse, community forums).

- They create a post: "Here is the fast direct line for [Brand] support."

- Google's AI, hungry for "fresh" and "human" content, scrapes this post.

- The LLM (Large Language Model) sees the number associated with your Brand Entity in a high-trust context (e.g., Reddit).

- It ingests the connection:

[Brand Entity] --(hasSupportNumber)--> [Fake Number].

Start treating your Knowledge Graph as a security perimeter. If you don't define your entity attributes clearly, someone else will.

The Entity SEO Defense Strategy

You cannot "patch" Google. But you can immunize your brand against these injections by mastering Entity SEO.

The goal is to make your official data so accessible, structured, and authoritative that no probabilistic model would ever choose a Reddit thread over your own signal.

1. Structure Your Truth with Schema.org

Your homepage and contact pages must scream their identity in a language machines understand. We are not talking about basic meta tags. We mean robust Organization and ContactPoint Schema.

    <script type="application/ld+json">
    {
      "@context": "https://schema.org",
      "@type": "Organization",
      "name": "AICarma",
      "url": "https://www.aicarma.com",
      "contactPoint": {
        "@type": "ContactPoint",
        "telephone": "+1-800-555-0199",
        "contactType": "customer service",
        "areaServed": "US",
        "availableLanguage": ["English", "Spanish"]
      }
    }
    </script>

- Action: Audit your Schema markup today. Is your support number explicitly defined? Is it easy for a bot to parse?
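One way to start that audit is a small script that reads a page's HTML and lists every telephone value declared in ContactPoint markup. The sketch below uses only the Python standard library; the class and function names are hypothetical, and it assumes the page source has already been fetched and that the JSON-LD sits in the static HTML rather than being injected by JavaScript.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def find_telephones(node):
    """Recursively yield the 'telephone' value of every ContactPoint node."""
    if isinstance(node, dict):
        if node.get("@type") == "ContactPoint" and "telephone" in node:
            yield node["telephone"]
        for value in node.values():
            yield from find_telephones(value)
    elif isinstance(node, list):
        for item in node:
            yield from find_telephones(item)


def audit_contact_numbers(html):
    """Return all ContactPoint telephone values found in the page's JSON-LD."""
    parser = JsonLdExtractor()
    parser.feed(html)
    numbers = []
    for raw in parser.blocks:
        try:
            numbers.extend(find_telephones(json.loads(raw)))
        except json.JSONDecodeError:
            continue  # malformed JSON-LD is skipped here; it is worth flagging separately
    return numbers
```

An empty result for your contact page means a bot reading the raw HTML finds no explicitly declared support number, which is the gap the markup above is meant to close.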

2. Robot Control Policy

Review your Robots.txt Strategy. Are you blocking the very bots that need to verify your identity?

- Blocking Google-Extended or GPTBot might feel like a privacy win, but it prevents these models from re-verifying your official phone numbers during their training runs.

- Recommendation: Explicitly Allow your "About" and "Contact" pages for all major AI user-agents. You want them to over-index on your truth.
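A quick way to test a robots.txt policy against this recommendation is Python's standard-library robots.txt parser. The sketch below is illustrative: the two user-agent tokens are the ones named above, while the /about and /contact paths, the example.com site, and the helper name are assumptions to adapt to your own site.

```python
from urllib.robotparser import RobotFileParser

AI_USER_AGENTS = ["Google-Extended", "GPTBot"]  # tokens named in this article
CRITICAL_PATHS = ["/about", "/contact"]         # hypothetical paths; adjust to your site


def blocked_pairs(robots_txt, site):
    """Return (user_agent, path) pairs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        (agent, path)
        for agent in AI_USER_AGENTS
        for path in CRITICAL_PATHS
        if not parser.can_fetch(agent, site + path)
    ]


if __name__ == "__main__":
    restrictive = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"
    for agent, path in blocked_pairs(restrictive, "https://www.example.com"):
        print(f"{agent} cannot fetch {path}")
```

Note that Python's parser applies the first matching rule in a group, whereas some crawlers use the most specific match, so listing the Allow lines before a broad Disallow keeps the result consistent under both interpretations.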

3. The "llms.txt" Standard

Adopt the emerging llms.txt standard. Create a simple, text-only file at /llms.txt that explicitly lists your critical entity facts—support numbers, official domains, and social handles—in a format optimized for RAG (Retrieval-Augmented Generation).
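As a rough illustration, the file can be generated from the same verified facts you publish in Schema markup. The sketch below follows the general shape of the public llms.txt proposal (a title, a short summary in a blockquote, then sections of bullet items); the section names, the helper function, and the /contact URL are assumptions for illustration, not part of any specification.

```python
def render_llms_txt(name, summary, facts, links):
    """Render entity facts as a small Markdown-style llms.txt document."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    lines += ["## Official contact facts", ""]       # hypothetical section name
    lines += [f"- {label}: {value}" for label, value in facts.items()]
    lines += ["", "## Official pages", ""]           # hypothetical section name
    lines += [f"- [{title}]({url})" for title, url in links.items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = render_llms_txt(
        name="AICarma",
        summary="Verified contact details for AICarma.",
        facts={
            "Customer service phone": "+1-800-555-0199",
            "Official domain": "www.aicarma.com",
        },
        links={"Contact": "https://www.aicarma.com/contact"},  # hypothetical URL
    )
    print(text)
```

Generating the file from one source of truth keeps the support number in /llms.txt and in your Schema markup from drifting apart.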

How AICarma Detects Hallucinations

Manual checking is impossible. You cannot Google yourself 10,000 times a day with different IP addresses to catch a fleeting hallucination.

This is why we built the AI Visibility Score.

At AICarma, we don't just track if you "rank." We perform Adversarial Brand Testing. Our platform actively interrogates AI models (Gemini, ChatGPT, Claude, Perplexity) with thousands of permutations of customer intent queries:

- "How do I contact [Brand]?"

- "What is [Brand]'s refund policy?"

- "Is [Brand] legitimate?"

We analyze the Share of Model—the percentage of time the AI provides the correct, verified answer versus a hallucination or a competitor's data.
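The idea can be sketched in a few lines of Python. This is a simplified illustration, not AICarma's implementation: it expands the query templates listed above for one brand and turns a set of already-labeled answers into shares. The label names and both function names are assumptions.

```python
from collections import Counter

# Query templates taken from the list above.
TEMPLATES = [
    "How do I contact {brand}?",
    "What is {brand}'s refund policy?",
    "Is {brand} legitimate?",
]


def build_queries(brand, templates=TEMPLATES):
    """Expand each template into a concrete query for one brand."""
    return [template.format(brand=brand) for template in templates]


def share_of_model(labels):
    """Map each answer label to its fraction of all sampled answers."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: count / total for label, count in counts.items()}


if __name__ == "__main__":
    print(build_queries("AICarma"))
    # Hypothetical labels for ten sampled answers.
    sampled = ["correct"] * 7 + ["hallucination"] * 2 + ["competitor"]
    print(share_of_model(sampled))
```

In this made-up sample the correct answer holds a 70% share, with 20% hallucinations and 10% competitor data.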

The "Hallucination Alert"

When our system detects that Gemini is serving a phone number for your brand that does not match your verified entity profile, we trigger a critical alert.

- Detection: "Gemini Pro is citing +1-555-0123 as your support line."

- Verification: "This number is not in your authorized list."

- Action: You get the evidence needed to file a specific takedown request with Google (using their "Report AI Overview" tool) and the data to reinforce your Entity SEO strategy.
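The detection and verification steps can be approximated with a short Python sketch. It is a minimal illustration under stated assumptions, not the production system: the regular expression is deliberately loose (it can also match dates or order numbers), numbers are compared by digits only, and the function names are hypothetical.

```python
import re

# Loose pattern: optional "+", then digits mixed with spaces, dots, dashes, or parentheses.
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{6,}\d")


def normalize(number):
    """Keep digits only so that formatting differences do not matter."""
    return re.sub(r"\D", "", number)


def unauthorized_numbers(answer, authorized):
    """Return phone-like strings in the answer that are not on the authorized list."""
    allowed = {normalize(number) for number in authorized}
    return [
        match
        for match in PHONE_PATTERN.findall(answer)
        if normalize(match) not in allowed
    ]


if __name__ == "__main__":
    answer = "You can reach support at +1-555-0123 or +1 (800) 555-0199."
    print(unauthorized_numbers(answer, {"+1-800-555-0199"}))  # ['+1-555-0123']
```

Because the comparison is digit-based, the same number written with and without a country code would not match; a real pipeline would need a proper phone-number parser for that.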

Conclusion: Security Is Now Semantic

The era of "keywords" is over. We are in the era of Entities. Your brand is no longer just a website; it is a data object in the latent space of a neural network.

If that object is undefined, it is vulnerable.

Don't let an algorithm write your brand's story—or its support number. Take control of your entity.

FAQ

Can I opt out of AI Overviews to stop this?

No. You cannot opt out of appearing in AI Overviews unless you de-index your site entirely, which would wipe out your organic search visibility. De-indexing would not remove the fake number either, because the AI picks it up from third-party pages rather than from your site. You can only influence the data the AI uses through better Entity SEO.

How do I report a fake number in an AI Overview?

Google provides a "Feedback" link at the bottom of every AI Overview. However, for faster action, having a documented history of the hallucination (provided by tools like AICarma) helps in escalating the request via Google Search Console support channels.

Does Schema markup guarantee accuracy?

It is not a guarantee, but it is a strong signal. Schema provides "explicit" data to counter the "probabilistic" data of the LLM. It raises the confidence threshold required for the model to override your data with a random forum post.

Why did my social listening tool miss this?

Social listening tools scrape the surface web (HTML pages). They do not render AI generations. A scam number generated dynamically by an LLM leaves no footprint on the surface web for a traditional crawler to find. You need Generative Monitoring.