The Death of the Survey: Why Enterprises Are Abandoning Traditional Research for AI Monitoring

Last Updated: October 15, 2025

A quiet revolution is transforming how large enterprises understand their markets. For decades, traditional surveys and focus groups formed the bedrock of strategic planning. Today, that foundation is cracking—and the world's largest companies are racing to replace it.

Table of Contents

- The Crisis of Traditional Research

- Survey Fatigue: The Data Quality Collapse

- The Speed Problem: When Insights Arrive Too Late

- The Rise of AI Model Polling

- Real-World Impact: The Automotive Example

- Why Enterprise Demand Is Surging

- FAQ

The Crisis of Traditional Research

Consider a scenario playing out in boardrooms across the Fortune 500: A major automotive manufacturer prepares to launch a new crossover model. In the traditional paradigm, gathering market feedback resembles steering an ocean liner—slow, expensive, and frustratingly imprecise.

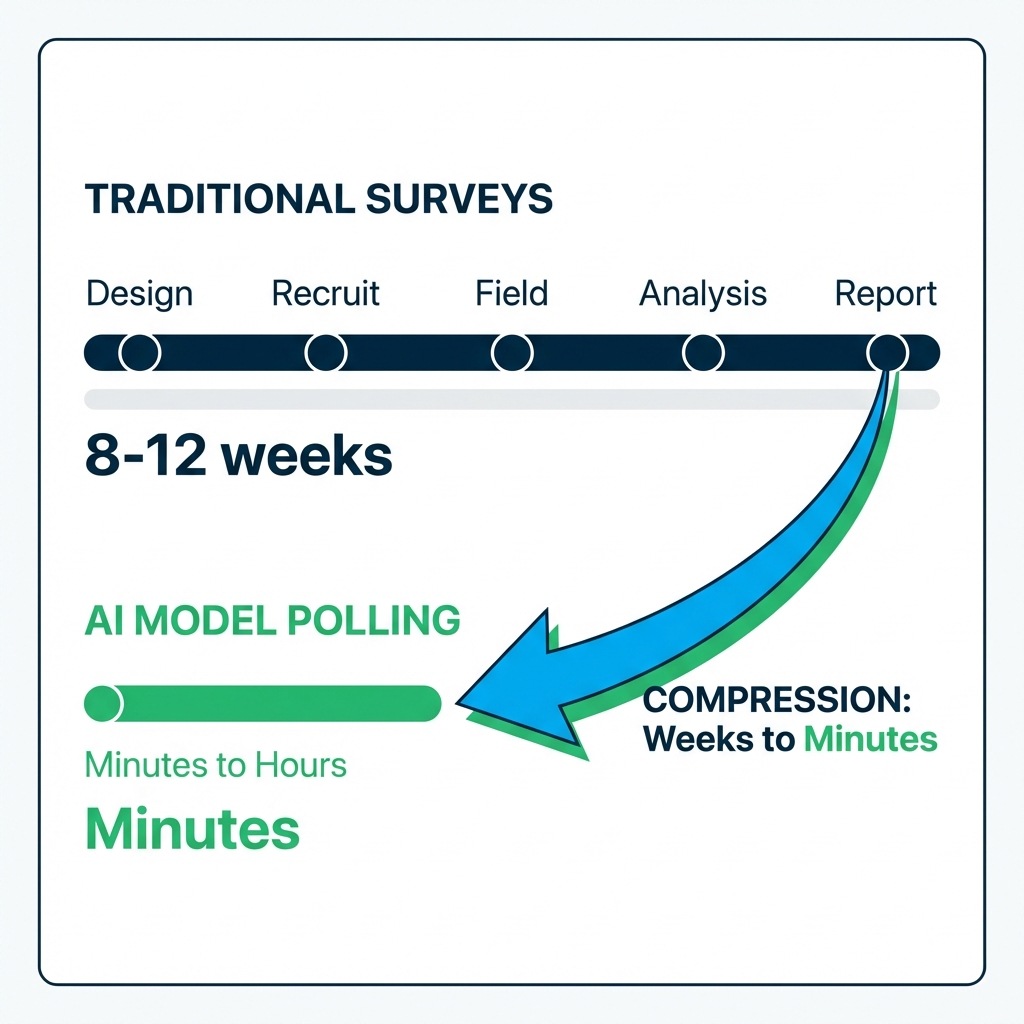

The research process might look like this:

- Week 1-2: Design questionnaire, negotiate with agency

- Week 3-4: Recruit representative sample

- Week 5-8: Field research, collect responses

- Week 9-10: Data cleaning, reject bad responses

- Week 11-12: Analysis and report delivery

By the time insights arrive, the market has already shifted. Competitor campaigns have launched. Consumer sentiment has evolved. The carefully gathered data describes a world that no longer exists.

This isn't a hypothetical—it's the daily reality for enterprise research teams.

Survey Fatigue: The Data Quality Collapse

The survey industry faces what researchers call "attention inflation." Every brand interaction (buying coffee, calling support, visiting a website) ends with a request to "rate your experience." This relentless bombardment has triggered survey fatigue, a phenomenon where response quality deteriorates as request volume increases.

Research from longitudinal panels shows a clear correlation: the more frequently respondents are contacted, the lower the quality and quantity of their responses. People answer mechanically, select random options just to close pop-up windows, or ignore requests entirely.

The Deliverability Crisis

Technical barriers compound the problem. In 2025, email platforms like Gmail and Outlook are aggressively filtering survey invitations. Deliverability rates for research platforms have dropped by 19-27%, meaning companies lose access to part of their audience regardless of budget.

Selection Bias and Data Fraud

When responses do arrive, they suffer from critical distortions:

- Selection Bias: Only brand advocates and angry critics respond; the "silent majority" disappears

- Bad Data: Bots and dishonest panelists generate random responses for incentives

- Quality Decay: Analysts discard up to 20% of records due to obvious fraud
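The compounding effect of these losses is easiest to see as a funnel. The sketch below is a back-of-the-envelope calculation, not a model of any real panel: the invitation count, baseline deliverability (90%), and response rate (10%) are hypothetical, and the 19-27% deliverability drop is treated as a relative decline, which is an assumption since the figure above does not say whether it is relative or in percentage points.

```python
# Back-of-the-envelope survey funnel. All inputs are hypothetical except the
# 19-27% deliverability drop and the "up to 20%" discard rate cited in the text.

def usable_responses(invites: int, deliverability: float,
                     response_rate: float, discard_rate: float) -> int:
    """Estimate clean completes left after delivery, response, and fraud filtering."""
    delivered = invites * deliverability    # invitations that reach an inbox
    responded = delivered * response_rate   # people who actually answer
    kept = responded * (1 - discard_rate)   # records surviving fraud/quality checks
    return round(kept)


INVITES = 10_000            # hypothetical invitation batch
BASE_DELIVERABILITY = 0.90  # hypothetical pre-filtering inbox rate
RESPONSE_RATE = 0.10        # hypothetical response rate
DISCARD_RATE = 0.20         # upper bound cited in the text

baseline = usable_responses(INVITES, BASE_DELIVERABILITY, RESPONSE_RATE, DISCARD_RATE)
for drop in (0.19, 0.27):
    degraded = usable_responses(INVITES, BASE_DELIVERABILITY * (1 - drop),
                                RESPONSE_RATE, DISCARD_RATE)
    print(f"{drop:.0%} deliverability drop: {baseline} -> {degraded} usable responses")
```

Under these assumed inputs, a 27% relative drop in deliverability cuts usable completes from 720 to about 526 before any fraud beyond the cited 20% is considered.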

The result: expensive research that produces unreliable conclusions.

The Speed Problem: When Insights Arrive Too Late

Return to our automotive example. In today's dynamic economy, an 8-12 week research cycle represents an eternity. Trends can emerge, peak, and fade within that window. Decisions based on stale data aren't just ineffective—they're dangerous.

When a competitor monitors real-time social signals and responds to user complaints within 24 hours, the company waiting for quarterly reports inevitably loses market share.

The data arrives, as practitioners ruefully note, "late, distorted, and non-objective."

The Rise of AI Model Polling

The answer to this crisis is emerging from an unexpected direction: using Large Language Models as proxy respondents. This approach, often called Model Polling or "synthetic research," operates on a revolutionary premise: modern LLMs, trained on vast portions of the public internet, contain a compressed model of human society itself.

How Model Polling Works

Instead of recruiting 500 people across specific demographics and paying them to complete surveys, enterprises now create synthetic personas. Using specialized system prompts, a single model (like GPT-4 or Claude) can simulate diverse demographic profiles:

- "You are a 35-year-old suburban mother concerned about safety"

- "You are a 20-year-old tech student seeking budget-friendly style"
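A minimal sketch of how such persona prompts might be assembled and polled, assuming a chat-style interface where each request is a list of role/content messages (the shape most LLM chat APIs use). The `Persona` class, `poll` function, and `ask_model` callable are hypothetical; `ask_model` stands in for whatever provider client a team actually uses, and a deterministic stub replaces it here so the example runs offline.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, List

# Chat-style request: a list of {"role": ..., "content": ...} messages.
Messages = List[Dict[str, str]]
# Hypothetical stand-in for a real provider client: messages in, text out.
AskModel = Callable[[Messages], str]


@dataclass(frozen=True)
class Persona:
    age: int
    description: str

    def system_prompt(self) -> str:
        return f"You are a {self.age}-year-old {self.description}."


def build_messages(persona: Persona, question: str) -> Messages:
    """Pair the persona's system prompt with the survey question."""
    return [
        {"role": "system", "content": persona.system_prompt()},
        {"role": "user", "content": question},
    ]


def poll(personas: List[Persona], question: str, ask_model: AskModel,
         runs_per_persona: int = 1) -> Dict[Persona, Counter]:
    """Ask each persona the same question several times and tally normalized answers."""
    tallies: Dict[Persona, Counter] = {}
    for persona in personas:
        messages = build_messages(persona, question)
        answers = (ask_model(messages).strip().lower() for _ in range(runs_per_persona))
        tallies[persona] = Counter(answers)
    return tallies


# Offline stub so the sketch runs without any API key.
def fake_model(messages: Messages) -> str:
    return "Yes" if "safety" in messages[0]["content"] else "No"


personas = [
    Persona(35, "suburban mother concerned about safety"),
    Persona(20, "tech student seeking budget-friendly style"),
]
question = "Would you pay extra for heated steering? Answer yes or no."
for persona, tally in poll(personas, question, fake_model, runs_per_persona=3).items():
    print(persona.system_prompt(), dict(tally))
```

Repeating each question (`runs_per_persona`) matters with real models, whose sampled outputs vary between calls; the tally then gives a distribution of answers per persona rather than a single reply.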

The advantages over traditional research are fundamental:

| Factor | Traditional Surveys | AI Model Polling |
|---|---|---|
| Speed | 8-12 weeks | Minutes to hours |
| Cost | Baseline (100%) | 10-25% of traditional |
| Scale | Hundreds of respondents | Millions of simulations |
| Bias | Observer effect, social pressure | No observer effect or social pressure |
| Sensitive topics | Reluctance, dishonesty | No respondent reluctance |

Research shows 87% of teams using synthetic data express satisfaction with results, noting high correlation with actual market behavior.
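Teams that want to check this kind of correlation on their own data could field a small human benchmark alongside a synthetic run and compare the two. The sketch below computes a Pearson correlation between answer shares from both sources; the feature shares and the 0.8 review threshold are hypothetical placeholders, not figures from the research cited above.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


def needs_human_review(synthetic: Sequence[float], human: Sequence[float],
                       min_r: float = 0.8) -> bool:
    """Flag a synthetic study whose results track the human benchmark too loosely."""
    return pearson(synthetic, human) < min_r


# Hypothetical share of respondents rating each of four features "must have".
synthetic_shares = [0.62, 0.41, 0.35, 0.18]
human_shares = [0.58, 0.45, 0.30, 0.22]
print(f"r = {pearson(synthetic_shares, human_shares):.2f}")
print("needs human review:", needs_human_review(synthetic_shares, human_shares))
```

Correlation only shows whether synthetic and human results rise and fall together; it will not catch a synthetic run that is consistently too optimistic, so a level check such as mean absolute difference is a sensible companion.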

Real-World Impact: The Automotive Example

Let's return to our automotive manufacturer. In the new paradigm, the product team no longer waits months to understand customer reaction to a feature decision.

Traditional approach: "Should we include heated steering as standard?"

- 10-week study, $200,000 budget, delayed decision

AI Monitoring approach: Query multiple AI models connected to real-time data streams from Reddit, automotive forums, and social media

- Results in hours, continuous monitoring, immediate iteration

The models aren't limited to training data that may be two years old. Through architectures like RAG (Retrieval-Augmented Generation), they connect to vector databases populated with posts from the last 24 hours, and the AI synthesizes market reaction in real time.
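The retrieval step can be sketched in a few lines. This is a toy illustration under stated assumptions: the `Post` records, three-dimensional embeddings, and an in-memory list stand in for a real embedding model and vector database, and the 24-hour window mirrors the text. It filters posts by recency, ranks the survivors by cosine similarity to the query, and packs the top hits into a prompt for whichever model is being queried.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from math import sqrt
from typing import List


@dataclass
class Post:
    source: str             # e.g. "reddit" or "forum" (illustrative labels)
    text: str
    created_at: datetime
    embedding: List[float]  # toy vector; a real system would use an embedding model


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity, returning 0.0 for zero-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a, norm_b = sqrt(sum(x * x for x in a)), sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_recent(posts: List[Post], query_embedding: List[float], now: datetime,
                    window: timedelta = timedelta(hours=24), k: int = 3) -> List[Post]:
    """Keep posts inside the recency window, then return the k most similar."""
    cutoff = now - window
    recent = [p for p in posts if p.created_at >= cutoff]
    recent.sort(key=lambda p: cosine(p.embedding, query_embedding), reverse=True)
    return recent[:k]


def build_prompt(question: str, context: List[Post]) -> str:
    """Ground the model's answer in the retrieved posts."""
    snippets = "\n".join(f"[{p.source}] {p.text}" for p in context)
    return f"Answer using only these recent posts:\n{snippets}\n\nQuestion: {question}"


now = datetime(2025, 10, 15, 12, 0, tzinfo=timezone.utc)
posts = [
    Post("reddit", "Heated steering is a must in winter.", now - timedelta(hours=2), [1.0, 0.0, 0.0]),
    Post("forum", "Heated steering should be optional.", now - timedelta(hours=5), [0.8, 0.2, 0.0]),
    Post("social", "Old thread about heated steering.", now - timedelta(hours=48), [1.0, 0.0, 0.0]),
    Post("social", "The infotainment screen lags.", now - timedelta(hours=1), [0.0, 1.0, 0.0]),
]
hits = retrieve_recent(posts, query_embedding=[1.0, 0.0, 0.0], now=now, k=2)
print(build_prompt("How do buyers feel about heated steering?", hits))
```

Filtering before ranking is the design choice that matters here: the 48-hour-old thread is as similar to the query as the freshest post, but the recency window keeps it out of the context.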

This is why enterprise interest in AI monitoring has exploded. At AICarma, we've observed that enterprise inquiries now outnumber SMB inquiries in our organic acquisition channels, and the challenges enterprises bring are far more sophisticated, requiring deep expertise in multi-model architectures and entity monitoring.

Why Enterprise Demand Is Surging

The shift from surveys to AI monitoring isn't driven by curiosity—it's driven by competitive necessity:

- Speed Requirements: Markets move in days, not quarters

- Cost Pressure: Traditional research budgets face scrutiny

- Quality Concerns: Survey data reliability continues declining

- AI Integration: Enterprises embedding AI across operations

- Reputation Management: Need to monitor AI-generated brand perceptions in real time

For companies accustomed to managing visibility across multiple AI models, the transition from passive research to active monitoring feels natural. For those still relying on quarterly reports, it feels like the ground shifting beneath their feet.
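Tracking how a brand appears in AI-generated answers can start with something as simple as a per-model mention rate. The sketch below is hypothetical and is not AICarma's method: the model names, the brand "Acme Motors," and the sample answers are placeholders, and a production system would also need entity resolution (aliases, misspellings) and sentiment analysis, which a regular expression does not provide.

```python
import re
from typing import Dict, List


def mention_rates(responses: Dict[str, List[str]], brand: str) -> Dict[str, float]:
    """Share of each model's answers that mention the brand (case-insensitive, whole words)."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    rates: Dict[str, float] = {}
    for model, answers in responses.items():
        hits = sum(1 for answer in answers if pattern.search(answer))
        rates[model] = hits / len(answers) if answers else 0.0
    return rates


# Hypothetical answers collected from two models to the same buyer-style prompt.
responses = {
    "model_a": [
        "Acme Motors leads on safety ratings.",
        "Consider the Zephyr or an Acme Motors crossover.",
        "Budget buyers tend to prefer the Zephyr.",
    ],
    "model_b": [
        "The Zephyr is popular with students.",
        "acme motors has had recent recalls.",
    ],
}
for model, rate in mention_rates(responses, "Acme Motors").items():
    print(f"{model}: {rate:.0%} of answers mention the brand")
```

Note that model_b's only mention concerns recalls: the rate measures visibility, not favorability, which is why sentiment belongs in any real monitoring pipeline.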

FAQ

Are AI-generated insights as reliable as human surveys?

For many use cases, yes—and often more so. AI eliminates observer effects, social desirability bias, and survey fatigue. Research shows 87% satisfaction rates among enterprise teams using synthetic respondents. However, AI works best when combined with human validation for emotionally nuanced or culturally sensitive topics.

How quickly can enterprises implement AI monitoring?

Specialized platforms enable deployment in weeks rather than months. The challenge isn't speed—it's choosing the right approach and integrating properly with existing research workflows.

Does this mean traditional research is obsolete?

Not entirely. Qualitative deep-dives, ethnographic research, and certain longitudinal studies retain value. But for fast-cycle tactical insights, AI monitoring is rapidly becoming the enterprise standard.

What infrastructure is required?

Enterprise AI monitoring requires proper technical foundations—access to multiple LLM providers, real-time data ingestion capabilities, and sophisticated orchestration layers. Building this infrastructure in-house is possible but increasingly complex.
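The orchestration layer often comes down to fanning one prompt out to several providers concurrently while tolerating individual failures. A minimal asyncio sketch, assuming each provider is wrapped as an async function that takes a prompt and returns text; the provider names and stub functions are hypothetical and make no network calls.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Optional

# Hypothetical provider wrapper: prompt in, completion text out.
Provider = Callable[[str], Awaitable[str]]


async def fan_out(prompt: str, providers: Dict[str, Provider],
                  timeout_s: float = 10.0) -> Dict[str, Optional[str]]:
    """Query all providers concurrently; a timeout or error yields None for that provider."""

    async def call(name: str, provider: Provider):
        try:
            return name, await asyncio.wait_for(provider(prompt), timeout=timeout_s)
        except Exception:  # includes timeouts; one outage should not sink the batch
            return name, None

    pairs = await asyncio.gather(*(call(name, p) for name, p in providers.items()))
    return dict(pairs)


# Offline stubs standing in for real provider clients.
async def fast_provider(prompt: str) -> str:
    await asyncio.sleep(0)
    return f"fast answer to: {prompt}"


async def slow_provider(prompt: str) -> str:
    await asyncio.sleep(1)
    return "arrived too late"


async def broken_provider(prompt: str) -> str:
    raise RuntimeError("provider outage")


results = asyncio.run(fan_out(
    "Rate heated steering as a standard feature.",
    {"fast": fast_provider, "slow": slow_provider, "broken": broken_provider},
    timeout_s=0.05,
))
print(results)
```

Since Python 3.8, `asyncio.CancelledError` derives from `BaseException`, so the broad `except Exception` records provider failures without swallowing cancellation of the whole batch.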

The transition from survey-based research to AI-powered monitoring represents one of the most significant shifts in corporate intelligence since the internet itself. Companies that master this capability gain real-time market visibility. Those that don't risk making decisions based on data that describes yesterday's world—while competitors see tomorrow.