The Economics of Enterprise AI Monitoring: Build vs. Buy and the Hidden Cost Spiral

Last Updated: November 18, 2025

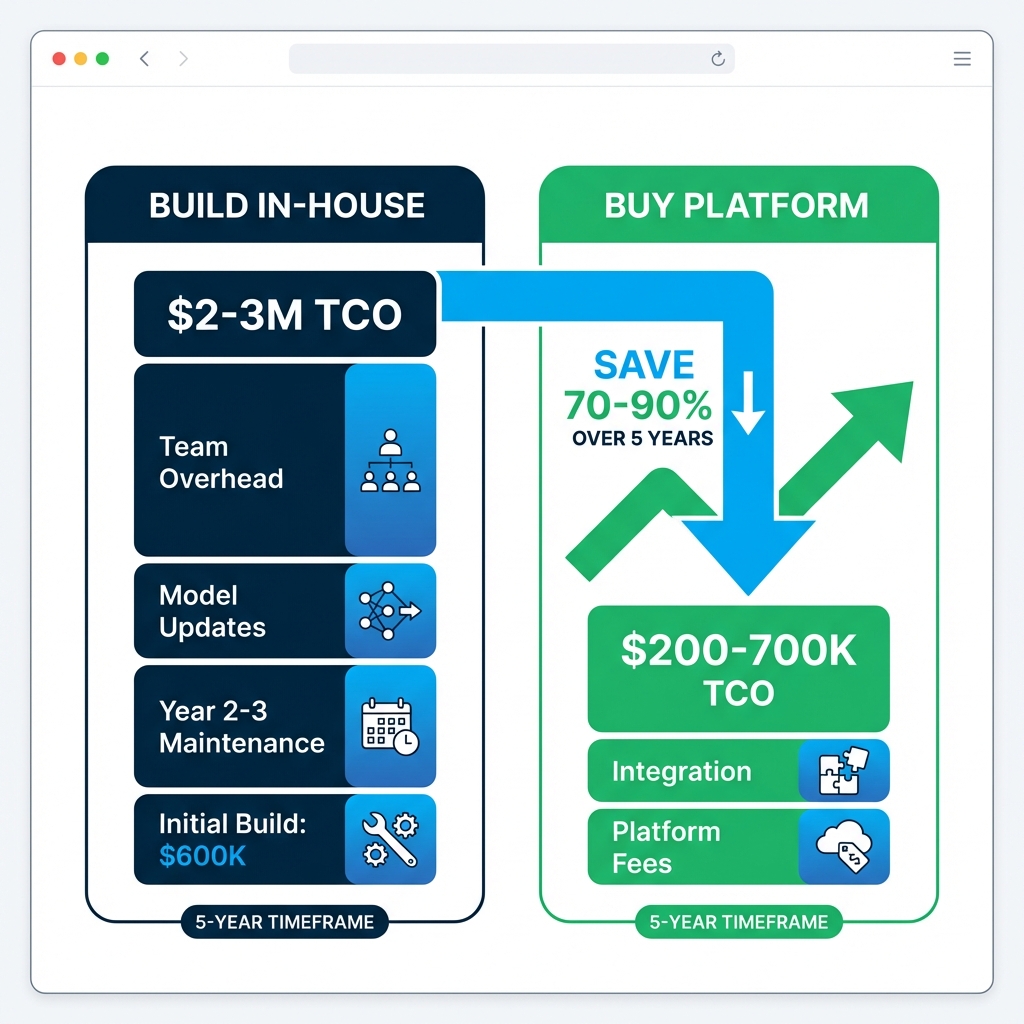

The CFO's approval seemed straightforward: $600,000 to build an internal AI monitoring system. Engineering estimated 18 months to production. Leadership wanted full control over the technology stack.

Three years later, the true cost had ballooned to $2.4 million—and the system still couldn't match commercial alternatives.

This story repeats across enterprise IT with alarming frequency. AI monitoring infrastructure carries hidden economics that catch even sophisticated organizations off guard.

Table of Contents

- The New Economics of AI Infrastructure

- The Inference Cost Trap

- Build vs. Buy: The Real Numbers

- Hidden Costs of Building In-House

- The Platform Advantage

- Making the Decision

- FAQ

The New Economics of AI Infrastructure

Traditional enterprise software follows familiar economics: large upfront development costs (CapEx), modest ongoing maintenance (OpEx), and predictable scaling. AI infrastructure inverts this model.

Training vs. Inference: Where the Money Goes

In AI economics, two cost categories dominate:

Training costs: The one-time expense of creating or fine-tuning a model. For enterprises using commercial LLMs, this is largely the provider's problem.

Inference costs: The ongoing expense of running the model—every API call, every query, every generated response. This is your problem, and it never stops.

Here's the counterintuitive reality: inference costs typically dwarf training costs over time. Every time your monitoring system analyzes a social post, polls a model, or generates a report, the billing meter ticks.

The Agentic Multiplier

Modern AI monitoring systems aren't simple query-response tools. They're increasingly agentic—meaning they chain multiple AI calls together to complete complex tasks.

Consider what happens when an enterprise monitors brand sentiment across a product launch:

- Initial query to identify relevant discussions

- Entity extraction from each source

- Sentiment classification per entity

- Trend analysis across time series

- Executive summary generation

- Alert generation for negative signals

A single monitoring check might involve 5-20 model calls. Multiply that by continuous monitoring across brands, products, and regions, and costs spiral quickly.
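A back-of-envelope model shows how the chain multiplies spend. This is a minimal Python sketch. The 2,000 tokens per call, the $3 per 1M token price, and the 50 monitored targets checked 10 times a day are hypothetical placeholders, not measured figures:

```python
def monthly_inference_cost(calls_per_check: int, tokens_per_call: int,
                           price_per_million: float, checks_per_day: int,
                           days: int = 30) -> float:
    """Estimated USD spend for one month of continuous monitoring."""
    total_tokens = calls_per_check * tokens_per_call * checks_per_day * days
    return total_tokens / 1_000_000 * price_per_million


targets = 50                      # hypothetical brands x products x regions being watched
checks_per_day = targets * 10     # hypothetical: each target checked 10 times a day

single_query = monthly_inference_cost(1, 2_000, 3.0, checks_per_day)
agentic = monthly_inference_cost(20, 2_000, 3.0, checks_per_day)
print(f"single query: ${single_query:,.0f}/month")   # $90/month
print(f"20-call chain: ${agentic:,.0f}/month")        # $1,800/month
```

Spend scales linearly with every factor, so doubling the targets or the check frequency doubles the bill, and the calls-per-check factor multiplies on top of both.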

Organizations operating agentic AI systems report costs 10-20x higher than initial single-query prototypes suggested.

The Inference Cost Trap

Without rigorous monitoring, AI infrastructure budgets evaporate faster than anticipated.

The Runaway Query Problem

Early pilots look affordable. A few hundred queries per day, manageable API costs, promising results. Leadership approves scaling.

Then reality hits:

- More users discover the tool

- More use cases emerge

- Query volume grows exponentially

- Premium models get requested for quality

- No one thought to implement cost controls

We've observed organizations exhaust quarterly AI budgets in weeks after scaling without proper governance.

Model Selection Economics

Not all models cost equally:

| Model Tier | Typical Input Price (USD per 1M tokens) | Use Case |
|---|---|---|
| Premium (GPT-4, Claude 3 Opus) | $15-60 | Complex analysis, executive outputs |
| Standard (GPT-3.5, Claude 3 Sonnet) | $0.50-3 | Routine classification, categorization |
| Open Source (Llama, Mistral) | No API fee; infrastructure costs only | High-volume, lower-stakes tasks |

Output tokens are billed at a higher rate, two to five times the input price for the models listed.

Smart architectures route queries to the minimum viable model—but building this routing intelligence requires expertise that many in-house teams lack.
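The core of such a router can be sketched as "cheapest tier that is capable enough." This is a minimal Python illustration, not any platform's actual logic. The tier names, the 1-3 capability scale, the task-to-capability mapping, and the $0.20 amortized self-hosting price are all hypothetical placeholders:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str
    capability: int           # hypothetical scale: 1 = routine, 3 = complex analysis
    price_per_million: float  # USD per 1M input tokens (placeholder values below)


# Hypothetical catalogue loosely mirroring the tiers in the table above.
TIERS = [
    ModelTier("premium", capability=3, price_per_million=15.0),
    ModelTier("standard", capability=2, price_per_million=0.50),
    ModelTier("self-hosted", capability=1, price_per_million=0.20),  # amortized infra cost
]

# Hypothetical minimum capability each monitoring task needs.
TASK_REQUIREMENTS = {
    "relevance_filter": 1,
    "classification": 2,
    "entity_extraction": 2,
    "sentiment": 2,
    "executive_summary": 3,
}


def route(task: str) -> ModelTier:
    """Return the cheapest tier whose capability meets the task's requirement."""
    required = TASK_REQUIREMENTS.get(task, 3)  # unknown tasks go to the top tier
    eligible = [t for t in TIERS if t.capability >= required]
    return min(eligible, key=lambda t: t.price_per_million)


for task in ("relevance_filter", "classification", "executive_summary"):
    print(task, "->", route(task).name)
```

A static table like this is the easy part. The harder engineering is deciding the capability levels and adding escalation, such as retrying on a stronger tier when a cheap model's output fails validation.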

Build vs. Buy: The Real Numbers

The build vs. buy decision for AI monitoring involves numbers that frequently surprise leadership.

Typical In-House Build Trajectory

Year 1: Initial Development

- Engineering team: 4-6 FTEs at $200K loaded cost = $800K-1.2M

- Cloud infrastructure: $50-100K

- API costs for development: $30-50K

- Subtotal: ~$900K-1.4M (already exceeding the $600K "estimate")

Years 2-3: Maintenance Reality

- Ongoing engineering: 2-3 FTEs = $400-600K/year

- Model provider API updates (breaking changes 2-3x/year)

- New model integration (pressure to add Claude, Gemini, etc.)

- Security patches, compliance updates

- Subtotal: $800K-1.2M for Years 2-3 combined

5-Year TCO: $2-3 million

Commercial Platform Alternative

Year 1: Implementation

- Platform licensing: $100-150K

- Integration services: $50-100K

- Subtotal: $150-250K

Years 2-5: Operations

- Annual licensing: $100-150K/year

- Minimal integration maintenance

- Subtotal: $400-600K over 4 years

5-Year TCO: $550K-850K

The math is stark: building in-house often costs 3-4x commercial alternatives over a realistic time horizon.
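The totals can be rechecked from their line items. This is a minimal Python sketch. It assumes the unpriced Years 2-3 items (API updates, new model integrations, security patches) are absorbed by the ongoing engineering headcount rather than billed separately:

```python
# Build-vs-buy totals recomputed from the line items above.
# Each figure is a (low, high) range in thousands of USD.
Range = tuple[float, float]


def add(*ranges: Range) -> Range:
    """Sum the low ends and the high ends separately."""
    return (sum(r[0] for r in ranges), sum(r[1] for r in ranges))


def scale(r: Range, k: float) -> Range:
    """Multiply both ends of a range, e.g. an annual cost by a number of years."""
    return (r[0] * k, r[1] * k)


build_year1 = add((800, 1200), (50, 100), (30, 50))  # team + cloud + development API
build_per_year_after = (400, 600)                     # 2-3 FTEs of ongoing engineering
integration_once = (50, 100)                          # commercial integration services
license_per_year = (100, 150)                         # commercial platform licensing


def build_tco(years: int) -> Range:
    return add(build_year1, scale(build_per_year_after, years - 1))


def buy_tco(years: int) -> Range:
    return add(integration_once, scale(license_per_year, years))


print("build, 3 years:", build_tco(3))  # (1680, 2550)
print("buy, 5 years:  ", buy_tco(5))    # (550, 850)
```

The three-year build range of roughly $1.7-2.6M brackets the $2.4 million in the opening example, and the five-year buy range reproduces the $550-850K above. A five-year build figure depends on how much engineering Years 4-5 actually need. `build_tco(5)` assumes the full 2-3 FTEs continue.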

Hidden Costs of Building In-House

Beyond the headline numbers, in-house development carries hidden costs that erode the business case.

Technical Debt Accumulation

In-house AI systems accumulate technical debt at alarming rates:

- Provider API changes require constant adaptation

- New models demand new integration patterns

- Prompt engineering evolves rapidly

- Vector database technologies shift

Without dedicated maintenance investment, systems become brittle within 18-24 months.

Opportunity Cost

Those 4-6 engineers building monitoring infrastructure aren't building product features, customer tools, or revenue-generating capabilities. For most enterprises, AI monitoring is infrastructure—necessary but not differentiating.

Talent Risk

AI engineering talent is expensive and mobile. Key personnel departures can cripple in-house systems, leaving organizations with undocumented codebases they struggle to maintain.

Multi-Provider Complexity

Enterprise AI monitoring requires integration with multiple LLM providers:

- OpenAI (GPT-4, GPT-4o)

- Anthropic (Claude)

- Google (Gemini)

- Open source models (Llama, Mistral)

Each provider has different APIs, authentication patterns, rate limiting, error handling, and pricing. Abstracting this complexity into a unified interface is non-trivial engineering—exactly the multi-model orchestration challenge that specialized platforms have already solved.
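The usual shape of that unified interface is an adapter per provider behind one abstract class, plus shared retry and fallback logic. This is a minimal Python sketch. `ProviderAdapter`, `RateLimited`, `StubAdapter`, and `complete_with_fallback` are hypothetical names, and the stub stands in for real vendor SDK calls, which are deliberately not shown:

```python
import time
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Completion:
    """Provider-neutral result returned by every adapter."""
    text: str
    provider: str


class RateLimited(Exception):
    """Normalized throttling error; each adapter maps its vendor's 429-style error to this."""


class ProviderAdapter(ABC):
    """One subclass per vendor hides that vendor's API, auth, and error conventions."""

    name: str

    @abstractmethod
    def complete(self, prompt: str) -> Completion: ...


class StubAdapter(ProviderAdapter):
    """Stand-in for a real adapter; a real one would call the vendor's SDK here."""

    def __init__(self, name: str, throttled: bool = False):
        self.name = name
        self.throttled = throttled

    def complete(self, prompt: str) -> Completion:
        if self.throttled:
            raise RateLimited(self.name)
        return Completion(text=f"[{self.name}] {prompt}", provider=self.name)


def complete_with_fallback(adapters: list[ProviderAdapter], prompt: str,
                           retries: int = 2, backoff_s: float = 0.5) -> Completion:
    """Try each provider in order, retrying throttled calls with exponential backoff."""
    for adapter in adapters:
        for attempt in range(retries):
            try:
                return adapter.complete(prompt)
            except RateLimited:
                if attempt < retries - 1:          # no pointless wait before moving on
                    time.sleep(backoff_s * 2 ** attempt)
    raise RuntimeError("all providers are rate-limited")


result = complete_with_fallback(
    [StubAdapter("provider-a", throttled=True), StubAdapter("provider-b")],
    "Summarize today's brand mentions",
    backoff_s=0.0,  # no waiting in this demo
)
print(result.provider)  # provider-b
```

Every new provider means another adapter, another error mapping, and another set of rate limits to tune, which is where the ongoing maintenance cost comes from.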

The Platform Advantage

Commercial AI monitoring platforms offer advantages that compound over time.

Pre-Built Multi-Model Integration

Platforms like AICarma provide single interfaces to 10+ models, handling the integration complexity invisibly. When Anthropic ships a new Claude model or Google updates Gemini, the platform absorbs the integration work.

Optimized Cost Architecture

Purpose-built platforms implement sophisticated cost controls:

- Intelligent query routing to lowest-cost viable models

- Caching layers that prevent redundant API calls

- Batch processing for non-urgent queries

- Real-time spending visibility and alerts

These optimizations often reduce inference costs by 40-60% compared to naive implementations.
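The caching layer is the simplest of these to illustrate. This is a minimal Python sketch of an exact-match cache keyed on a hash of the model name and prompt, with a time-to-live so stale answers expire. `ResponseCache` and `fake_llm` are hypothetical names. Matching similar rather than identical queries (semantic caching with embeddings) needs more machinery than shown here:

```python
import hashlib
import time
from typing import Callable


class ResponseCache:
    """Exact-match cache: identical (model, prompt) pairs reuse a stored answer."""

    def __init__(self, ttl_seconds: float = 3600.0):
        self.ttl = ttl_seconds
        self._store: dict[str, tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model: str, prompt: str,
                    call: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1                  # served from cache: no API charge
            return entry[1]
        self.misses += 1
        answer = call(model, prompt)        # the only place a paid call happens
        self._store[key] = (now, answer)
        return answer


calls = []

def fake_llm(model: str, prompt: str) -> str:
    calls.append(prompt)                    # stand-in for a billed API call
    return f"sentiment for: {prompt}"


cache = ResponseCache(ttl_seconds=600)
for post in ["great launch", "great launch", "shipping delays", "great launch"]:
    cache.get_or_call("standard", post, fake_llm)
print(cache.hits, cache.misses, len(calls))  # 2 2 2
```

The hit rate depends entirely on how repetitive the workload is, so it is worth measuring before counting on any particular saving.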

Continuous Feature Evolution

Commercial platforms evolve continuously:

- New analysis capabilities

- Improved dashboards and alerting

- Additional data source integrations

- Enhanced competitor analysis features

In-house systems only evolve when internal engineering prioritizes improvements—which rarely happens once "maintenance mode" begins.

Enterprise-Grade Reliability

Monitoring systems that go down during crises are worse than useless. Commercial platforms offer:

- Multi-region redundancy

- 99.9%+ uptime SLAs

- 24/7 support

- Disaster recovery

Achieving equivalent reliability in-house requires significant additional investment.
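It helps to translate an uptime percentage into the outage time it still permits. This is a small Python calculation assuming a 30-day month and a 365-day year:

```python
def allowed_downtime_minutes(sla_percent: float, period_hours: float) -> float:
    """Maximum downtime (minutes) an availability SLA permits over a period."""
    return (1 - sla_percent / 100) * period_hours * 60


MONTH_HOURS = 30 * 24   # 30-day month
YEAR_HOURS = 365 * 24   # non-leap year

for sla in (99.0, 99.9, 99.99):
    per_month = allowed_downtime_minutes(sla, MONTH_HOURS)
    per_year_h = allowed_downtime_minutes(sla, YEAR_HOURS) / 60
    print(f"{sla}% uptime: {per_month:.1f} min/month, {per_year_h:.2f} h/year")
```

At 99.9%, that is about 43 minutes per 30-day month, or 8.76 hours per year. Each additional nine cuts the allowance tenfold.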

Making the Decision

When does building in-house make sense?

Build When:

- AI monitoring is core to your business model (you're a monitoring company)

- Regulatory requirements prohibit third-party data processing

- You have dedicated, stable AI engineering teams with spare capacity

- You need capabilities no platform offers and can't get via partnership

Buy When:

- AI monitoring is infrastructure, not product

- Time-to-value matters

- Engineering capacity is constrained

- You need multi-model coverage immediately

- TCO discipline is important

For most enterprises—even large ones—buying specialized platforms delivers better outcomes than building. The organizations with successful in-house AI infrastructure are typically those for whom AI is the business, not a supporting capability.

The Integration Question

One advantage of commercial platforms often overlooked: many are designed as components that integrate into larger systems. For organizations building broader intelligence platforms, acquiring proven AI monitoring capabilities can accelerate roadmaps by years while reducing execution risk.

FAQ

What's a realistic budget for enterprise AI monitoring?

Commercial platforms typically cost $100,000-300,000 annually for comprehensive enterprise deployments. This includes multi-model access, dashboard tooling, and support. In-house builds should budget $500,000+ for year one with $200,000+ for ongoing maintenance, and expect overruns.

How do we control AI inference costs?

Key strategies include: intelligent routing to cost-appropriate models, caching identical or similar queries, batching non-urgent requests, setting per-user or per-team quotas, and real-time spending visibility. Most platforms offer these controls natively; building them in-house requires significant engineering.
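Per-team quotas with early warnings can be sketched in a few lines. This is a minimal Python illustration. `SpendGuard`, the 80% alert ratio, and the $100 quota are hypothetical. In practice, the estimated cost would come from token counts and the actual cost from the provider's usage data:

```python
from collections import defaultdict


class SpendGuard:
    """Per-team monthly quota with an early-warning alert (a hypothetical helper)."""

    def __init__(self, quotas_usd: dict[str, float], alert_ratio: float = 0.8):
        self.quotas = quotas_usd
        self.alert_ratio = alert_ratio
        self.spent: dict[str, float] = defaultdict(float)
        self.alerts: list[str] = []

    def authorize(self, team: str, estimated_cost: float) -> bool:
        """Block a call (return False) if it would push the team past its quota."""
        return self.spent[team] + estimated_cost <= self.quotas.get(team, 0.0)

    def record(self, team: str, actual_cost: float) -> None:
        """Add a call's real cost; alert the first time spend crosses the threshold."""
        quota = self.quotas.get(team, 0.0)
        before = self.spent[team]
        self.spent[team] += actual_cost
        threshold = self.alert_ratio * quota
        if before < threshold <= self.spent[team]:
            self.alerts.append(f"{team}: ${self.spent[team]:.2f} of ${quota:.2f} used")


guard = SpendGuard({"brand-team": 100.0})
for cost in (30.0, 30.0, 25.0, 20.0):
    if guard.authorize("brand-team", cost):
        guard.record("brand-team", cost)
    else:
        print(f"blocked a ${cost:.2f} call: quota would be exceeded")
print(guard.spent["brand-team"], guard.alerts)
```

Splitting `authorize` (before the call, on an estimate) from `record` (after the call, on the real cost) keeps the quota accurate even when token estimates are off.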

Is open source a viable path to lower costs?

Open source models (Llama, Mistral) eliminate API fees but introduce infrastructure costs. Running models at enterprise scale requires GPU clusters, MLOps expertise, and security hardening. Total cost often approaches commercial alternatives—with more operational burden. Open source makes sense for specific use cases, not as a complete replacement strategy.
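Whether self-hosting pays off comes down to a break-even volume: the fixed monthly cost divided by the per-token saving. This is a minimal Python sketch. The $20,000/month fixed cost (GPUs, MLOps time, security hardening), the $3 per 1M token API price, and the $0.50 per 1M self-hosted marginal cost are hypothetical inputs, not quotes:

```python
def breakeven_tokens_per_month(fixed_monthly_usd: float,
                               api_price_per_million: float,
                               self_hosted_marginal_per_million: float = 0.0) -> float:
    """Monthly token volume at which self-hosting and a pay-per-token API cost the same."""
    saving_per_million = api_price_per_million - self_hosted_marginal_per_million
    if saving_per_million <= 0:
        return float("inf")  # self-hosting never catches up on per-token cost
    return fixed_monthly_usd / saving_per_million * 1_000_000


# Hypothetical inputs: $20K/month fixed; $3 vs. $0.50 per 1M tokens.
tokens = breakeven_tokens_per_month(20_000, 3.0, 0.50)
print(f"break-even at {tokens / 1e9:.1f}B tokens per month")  # 8.0B
```

Below the break-even volume, the API is cheaper. Above it, self-hosting starts to pay off, provided the fixed figure honestly includes the staff and hardening costs described above.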

How do we evaluate platform vendors?

Key criteria include: number of models supported, quality of entity and sentiment analysis, dashboard and alerting capabilities, API flexibility for integration, pricing transparency, and enterprise security compliance (SOC 2, GDPR, etc.). Request TCO projections based on your expected query volumes.
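One common way to compare vendors across these criteria is a weighted scorecard. This is a minimal Python sketch. The criterion keys mirror the list above, but the weights and the example 1-5 scores are hypothetical and should reflect your own priorities:

```python
# Hypothetical weights over the criteria listed above; adjust to your priorities.
WEIGHTS = {
    "models_supported": 0.20,
    "analysis_quality": 0.25,
    "dashboards_alerting": 0.15,
    "api_flexibility": 0.15,
    "pricing_transparency": 0.10,
    "security_compliance": 0.15,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 1-5 criterion scores into one weighted score (higher is better)."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"unscored criteria: {sorted(missing)}")
    return sum(WEIGHTS[c] * scores[c] for c in WEIGHTS)


vendor_a = dict.fromkeys(WEIGHTS, 4.0)
vendor_b = {**vendor_a, "security_compliance": 2.0, "pricing_transparency": 5.0}
print(f"A: {weighted_score(vendor_a):.2f}  B: {weighted_score(vendor_b):.2f}")  # A: 4.00  B: 3.80
```

Keep the scorecard separate from the TCO projection so that a strong feature score cannot hide a pricing mismatch at your expected query volumes.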

Can we start building and switch to buy later?

Technically yes, but this is expensive. Resources invested in in-house development become sunk costs. Migration from custom systems to platforms requires additional integration work. The more pragmatic path: start with a platform, build only if specific needs truly can't be met.

The economics of AI monitoring favor buy over build for most enterprises. The organizations achieving fastest time-to-value aren't those with the largest engineering teams—they're those that recognized AI monitoring infrastructure as a commodity and focused their differentiation efforts elsewhere.